Factor Analysis of Information Risk

Factor Analysis of Information Risk (FAIR) is an analysis method that helps a risk manager

understand the factors that contribute to risk, estimate the probability of threat occurrence,

and estimate potential losses. In the FAIR methodology, there are six types of loss:

• Productivity: Loss of productivity caused by the incident

• Response: Cost expended in incident response

• Replacement: Expense required to rebuild or replace an asset

• Fines and judgments: All forms of legal costs resulting from the incident

• Competitive advantage: Loss of business to other organizations

• Reputation: Loss of goodwill and future business
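
The six loss types can be tallied per scenario. The following is a minimal sketch and not part of the FAIR methodology itself: the LossEstimate class, its field names, and the figures are all hypothetical, and it adds up single point estimates where a real analysis would more likely work with ranges.

```python
from dataclasses import dataclass, fields


@dataclass
class LossEstimate:
    """Estimated losses for one scenario, one field per loss type listed above."""
    productivity: float = 0.0
    response: float = 0.0
    replacement: float = 0.0
    fines_and_judgments: float = 0.0
    competitive_advantage: float = 0.0
    reputation: float = 0.0

    def total(self) -> float:
        # Add every loss type; all values are assumed to share one currency.
        return sum(getattr(self, f.name) for f in fields(self))


# Hypothetical figures for a single incident scenario.
scenario = LossEstimate(
    productivity=20_000,
    response=15_000,
    replacement=5_000,
    fines_and_judgments=50_000,
)
print(scenario.total())
```

Keeping one field per loss type makes it harder to overlook a category when estimating a scenario, since every field must be either filled in or consciously left at zero.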

FAIR also focuses on the concept of asset value and liability. For example, a customer list is

an asset because the organization can reach its customers to solicit new business; however,

the customer list is also a liability because of the impact on the organization if the customer

list is obtained by an unauthorized person.

FAIR guides a risk manager through an analysis of threat agents and the different ways in

which a threat agent acts upon an asset:

• Access: Threat agent reads data without authorization

• Misuse: Threat agent uses an asset differently from its intended usage

• Disclose: Threat agent shares data with other unauthorized parties

• Modify: Threat agent modifies an asset

• Deny use: Threat agent prevents legitimate subjects from accessing assets

FAIR is considered to be complementary to risk management methodologies such as NIST

SP 800-30 and ISO/IEC 27005.

Asset Identification

After a risk assessment’s scope has been determined, the initial step in a risk assessment is

the identification of assets and a determination of each asset’s value. In a typical

information risk assessment, assets will consist of various types of information (including

intellectual property, internal operations, and personal information), the information

systems that support and protect those information assets, and the business processes that

are supported by these systems.

Hardware Assets

Hardware assets may include server and network hardware, user workstations, office

equipment such as printers and scanners, and Wi-Fi access points. Depending on the scope

of the risk assessment, assets in storage and replacement components may also be

included.

Because hardware assets are installed, moved, and eventually retired, it is important to

verify the information in the asset inventory periodically by physically confirming

that each asset exists. Depending upon the value and sensitivity of systems

and data, this inventory “true-up” may be performed as often as monthly or as seldom as

annually. Discrepancies in actual inventory must be investigated to verify that assets have

not been stolen or moved without authorization.
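
At its simplest, an inventory true-up compares two sets: the assets the inventory says exist and the assets actually found during a physical check. The sketch below assumes each asset carries a unique tag; the true_up function and the tag values are hypothetical.

```python
def true_up(recorded: set[str], observed: set[str]) -> dict[str, set[str]]:
    """Compare the asset inventory against a physical count."""
    return {
        # In the inventory but not found: possibly stolen, moved, or retired.
        "missing": recorded - observed,
        # Found on site but not in the inventory: never recorded, or moved in.
        "unrecorded": observed - recorded,
    }


# Hypothetical asset tags.
recorded = {"SRV-001", "SRV-002", "WAP-010", "PRN-004"}
observed = {"SRV-001", "WAP-010", "PRN-004", "LAP-099"}

discrepancies = true_up(recorded, observed)
for kind, tags in discrepancies.items():
    print(kind, sorted(tags))
```

Both kinds of discrepancy deserve investigation: a missing asset may have been stolen, and an unrecorded one may have been installed without authorization.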

Subsystem and Software Assets

Software applications such as software development tools, drawing tools, security scanning

tools, and subsystems such as application servers and database management systems are

all considered assets. Like physical assets, these assets often have tangible value and

should be periodically inventoried.

Cloud-Based Information Assets

One significant challenge related to information assets lies in the nature of cloud services

and how they work. An organization may have a significant portion of its information

assets stored by other organizations in their cloud-based services. Unless an organization

has exceedingly good business records, some of these assets will be overlooked, mainly

because of the ways in which cloud services work. It’s easy to sign up for a zero-cost or

low-cost service and immediately begin uploading business information to the service.

Unless the organization has advanced tools such as a cloud access security broker (CASB),

it will be next to impossible for an organization to know all of the cloud-based services that

are used.

Virtual Assets

Virtualization technology, which enables an organization to run multiple, separate

operating systems on one server, is popular with organizations, whether on

hardware servers located in their own data centers or in hosting facilities.

Organizations employing infrastructure as a service (IaaS) are also employing

virtualization technology.

Information Assets

Information assets are less tangible than hardware assets, as they are not easily observed.

Information assets take many forms:

• Personal information: Most organizations store information about people, whether they

are employees, customers, constituents, beneficiaries, or citizens. This data may include

sensitive information such as contact information and personal details, transactions, order

histories, and other items.

• Intellectual property: This type of information can take the form of trade secrets,

source code, product designs, policies and standards, and marketing collateral.

• Business operations: This generally includes merger and acquisition information and

other types of business processes and records.

• Virtual machines: Most organizations are moving their business applications to the

cloud, thereby eliminating the need to purchase hardware. Organizations that use IaaS

have virtual operating systems, which are another form of information. Even though these

operating systems are not purchased but instead are rented, there is nonetheless an asset

perspective: they take time to build and configure and therefore have a replacement cost.

The value of assets is discussed more fully later in this section.

Asset Classification

Asset classification is an activity whereby an organization assigns an asset to a category

that represents usage or risk. The purpose of asset classification is to determine, for each

asset, its level of criticality to the organization. In an organization with a formal privacy

program, asset classification will include one or more classifications for assets related to

personal information.

Criticality can be related to information sensitivity. For instance, a database of customers’

personal information that includes contact and payment information would be considered

highly sensitive and, in the event of compromise, could result in significant impact to

present and future business operations.

Criticality can also be related to operational dependency. For example, a database of virtual

server images may be considered highly critical. If an organization’s server images were to

be compromised or lost, this could adversely affect the organization’s ability to continue its

information processing operations.

These and other measures of criticality form the basis for information protection, system

redundancy and resilience, business continuity planning, and access management. Scarce

resources in the form of information protection and resilience need to be allocated to the

assets that require them the most. It doesn’t usually make sense to protect all assets to the

same degree—the more valuable, sensitive, and critical assets should be protected more

securely than those that are less valuable and critical.

Data Classification

Data classification is a process whereby different sets and collections of data in an

organization are analyzed for various types of sensitivity, criticality, integrity, and value.

There are different ways to understand these characteristics. These are some examples:

• Personal information: This type of information is most commonly associated with

natural persons. Examples include personal contact information, employment records,

medical records, and personal financial data such as credit card and bank account numbers.

• Sensitive information: Information other than personal information can also be

considered sensitive, including intellectual property, nonpublished financial records,

merger and acquisition information, and strategic plans.

• Operational criticality: In this category, information must be available at all times, or

perhaps the information is related to some factors of business resilience. Examples of

information in this category include virtual server images, incident response procedures,

and business continuity procedures. Corruption or loss of this type of information may

have a significant impact on ongoing business operations.

• Accuracy or integrity: Information in this category is required to be highly accurate. If

altered, the organization could suffer significant financial or reputational harm. Types of

information include exchange rate tables, product or service inventory data, machine

calibration data, and price lists. Corruption or loss of this type of information impacts

business operations by causing incomplete or erroneous transactions.

• Monetary value: This information may be more easily monetized by intruders who steal

it. Types of information include credit card numbers, bank account numbers, gift

certificates or cards, and discount or promotion codes. Loss of this type of information may

result in direct financial losses.

Most organizations store information that falls into all of these categories, with degrees of

importance within them. Although this may result in a complex matrix of information types

and degrees of importance or value, the most successful organizations will build a fairly

simple data classification scheme. For instance, an organization may develop four levels of

information classification, such as Public, Confidential, Regulated, and Secret.
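
A simple scheme like this can be expressed as an ordered set of levels, with each data set taking the highest level that any of its characteristics calls for. The sketch below is illustrative only: the ordering of the four levels and the mapping from characteristics to levels are assumptions, and each organization would define its own.

```python
from enum import IntEnum


class Classification(IntEnum):
    # Ordering is an assumption: a higher number means stricter handling.
    PUBLIC = 1
    CONFIDENTIAL = 2
    REGULATED = 3
    SECRET = 4


# Hypothetical mapping from the characteristics above to a minimum level.
CHARACTERISTIC_LEVEL = {
    "personal": Classification.REGULATED,
    "sensitive": Classification.CONFIDENTIAL,
    "operational_criticality": Classification.CONFIDENTIAL,
    "integrity": Classification.CONFIDENTIAL,
    "monetary_value": Classification.SECRET,
}


def classify(characteristics: list[str]) -> Classification:
    """Return the strictest level required by any characteristic."""
    levels = [CHARACTERISTIC_LEVEL[c] for c in characteristics]
    # Data with none of the listed characteristics defaults to PUBLIC.
    return max(levels, default=Classification.PUBLIC)


print(classify(["sensitive", "personal"]).name)
print(classify([]).name)
```

Collapsing a complex matrix of characteristics into a single level is what keeps the scheme simple enough for people to apply consistently.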

The Common Needs for Privacy, Confidentiality, Integrity, and Availability

We’ve looked at what information is, and what business is; we’ve looked at how businesses

need information to make decisions and how they need more information to know that their

decisions are being carried out effectively. Now it’s time to look at key characteristics of

information that directly relate to keeping it safe, secure, and reliable. Let’s define these

characteristics now, but we’ll do this from simplest to most complex in terms of the ideas

that they represent.

And in doing so, we’re going to have to get personal.

Privacy

For a little more than 200 years, Western societies have had a clearly established legal

and ethical concept of privacy as a core tenet of how they want their societies to work.

Privacy, which refers to a person (or a business), is the freedom from intrusion by others into

one’s own life, place of residence or work, or relationships with others. Privacy means that

you have the freedom to choose who can come into these aspects of your life and what they

can know about you. Privacy is an element of common law, or the body of unwritten legal

principles that are just as enforceable by the courts as the written laws are in many countries.

It starts with the privacy rights and needs of one person and grows to treat families, other

organizations, and other relationships (personal, professional, or social) as being free from

unwarranted intrusion.

Businesses create and use company confidential or proprietary information almost every day.

Both terms declare that the business owns this information; the company has paid the costs

to develop this information (such as the salaries of the people who thought up these ideas or

wrote them down in useful form for the company), which represents part of the business’s

competitive advantage over its competitors. Both terms reflect the legitimate business need

to keep some data and ideas private to the business.

UNWARRANTED?

Note the dual meaning of this very important term to you as an SSCP and as a citizen. An

unwarranted action is one that is either:

(1) Without a warrant, a court order, or other due process of law that allows the action to

take place

(2) Without reasonable cause; serving no reasonable purpose; or exceeding the common sense of

what is right and proper

Staying in a hotel room demonstrates this concept of privacy. You are renting the use of that

room on a nightly basis; the only things that belong to you are what you bring in with you.

Those personal possessions and the information, books, papers, and files on your phone or

laptop or thumb drives are your personal property and by law are under your control. No one

has permission or legal authority to enter your hotel room without your consent. Of course,

when you signed for the room, you signed a contract that gave your express permission to

designated hotel staff to enter the room for regular or emergency maintenance, cleaning, and

inspection. This agreement does not give the hotel permission to search through your luggage

or your belongings, or to make copies or records of what they see in your room. Whether it is

just you in the room, or whether a friend, family member, or associate visits or stays with

you, is a private matter, unless of course your contract with the hotel says “no guests” and

you are paying the single occupancy rate. The hotel room is a private space in this regard—

one in which you can choose who can enter or observe.

This is key: privacy can be enforced both by contracts and by law.

Privacy: In Law, in Practice, in Information Systems

Public law enforces these principles. Laws such as the Fourth and Fifth Amendments to the

U.S. Constitution, for example, address the first three, whereas the Privacy Act of 1974

created restrictions on how government could share with others what it knew about its

citizens (and even limited sharing of such information within government). Medical codes of

practice and the laws that reflect them encourage data sharing to help health professionals

detect a potential new disease epidemic, but they also require that personally identifiable

information in the clinical data be removed or anonymized to protect individual patients.

The European Union has enacted a series of policies and laws designed to protect individual

privacy as businesses and governments exchange data about people, transactions, and

themselves. The latest of these, General Data Protection Regulation 2016/679 (GDPR), is a

law that applies to all persons, businesses, or organizations doing anything involving the data

related to an EU person. The GDPR’s requirements meant that by May 2018, businesses had

to change the ways that they collected, used, stored, and shared information about anyone

who contacted them (such as by browsing to their website); they also had to notify such users

about the changes and gain their informed consent to such use. Many news and infotainment

sites hosted in the United States could not serve EU persons until they implemented changes

to become GDPR compliant.

GDPR also codified a number of important roles regarding individuals and organizations

involved in the creation and use of protected data (that is, data related to privacy):

Subject: the person described or identified by the data

Processor: a person or organization that creates, modifies, uses, destroys, or shares the

protected data in any way

Controller: the person or organization who directs such processing, and who has ultimate

data protection responsibility for it

Custodian: a person or organization who stores the data and makes it available when

directed by the controller

Data protection officer: a specified officer or individual of an organization who acts as the

focal point for all data protection compliance issues

Even in countries not subject to GDPR, organizations are finding it prudent to use these roles

or create comparable ones, in order to better manage and be accountable for protecting

privacy-related data. And organizations anywhere, operating under their local laws and

regulations, do need to be aware that data localization or data residency laws in many

countries may have specific data protection, storage, and processing requirements for data

pertaining to individuals who are residents or citizens of those countries.

In some jurisdictions and cultures, we speak of an inherent right to privacy; in others, we

speak to a requirement that people and organizations protect the information that they

gather, use, and maintain when that data is about another person or entity. In both cases, the

right or requirement exists to prevent harm to the individual. Loss of control over

information about you, or about your business, can cause you grave if not irreparable harm.

It’s beyond the scope of this book and the SSCP exam to go into much depth about the

GDPR’s specific requirements, or to compare its unified approach to the collection of federal,

state, and local laws, ordinances, and regulations in the United States. Regardless, it’s

important that as an SSCP you become aware of the expectations in law and practice for the

communities that your business serves in regard to protecting the confidentiality of data you

hold about individuals you deal with.

Private and Public Places

Part of the concept of privacy is connected to the reasonable expectation that other people

can see and hear what you are doing, where you are (or where you are going), and who might

be with you. It’s easy to see this in examples; walking along a sidewalk, you have every

reason to think that other people can see you, whether they are out on the sidewalk as well

or looking out the windows of their homes and offices, or from passing vehicles. The converse

is that when out on that public sidewalk, out in the open spaces of the town or city, you have

no reason to believe that you are not visible to others. This helps us differentiate between

public places and private places:

Public places are areas or spaces in which anyone and everyone can see, hear, or notice the

presence of other people, and observe what they are doing, intentionally or unintentionally.

There is little to no degree of control as to who can be in a public place. A city park is a public

place.

Private places are areas or spaces in which, by contrast, you as owner (or the person

responsible for that space) have every reason to believe that you can control who can enter,

participate in activities with you (or just be a bystander), observe what you are doing, or hear

what you are saying. You choose to share what you do in a private space with the people you

choose to allow into that space with you. By law, this is your reasonable expectation of

privacy, because it is “your” space, and the people you allow to share that space with you

share in that reasonable expectation of privacy.

Your home or residence is perhaps the prime example of what we assume is a private place.

Typically, business locations can be considered private in that the owners or managing

directors of the business set policies as to whom they will allow into their place of business.

Customers might be allowed onto the sales floor of a retail establishment but not into the

warehouse or service areas, for example. In a business location, however, it is the business

owner (or its managing directors) who has the most compelling reasonable expectation of

privacy, in law and in practice. Employees, clients, or visitors cannot expect that what they

say or do in that business location (or on its IT systems) is private to them, and not “in plain

sight” to the business. As an employee, you can reasonably expect that your pockets or lunch

bag are private to you, but the emails you write or the phone calls you make while on

company premises are not necessarily private to you. This is not clear-cut in law or practice,

however; courts and legislatures are still working to clarify this.

The pervasive use of the Internet and the World Wide Web, and the convergence of personal

information technologies, communications and entertainment, and computing, have blurred

these lines. Your smart watch or personal fitness tracker uplinks your location and exercise

information to a website, and you’ve set the parameters of that tracker and your Web

account to share with other users, even ones you don’t know personally. Are you doing your

workouts today in a public or private place? Is the data your smart watch collects and uploads

public or private data?

“Facebook-friendly” is a phrase we increasingly see in corporate policies and codes of conduct

these days. The surfing of one’s social media posts, and even one’s browsing histories, has

become a standard and important element of prescreening procedures for job placement,

admission to schools or training programs, or acceptance into government or military service.

Such private postings on the public Web are also becoming routine elements in employment

termination actions. The boundary between “public” and “private” keeps moving, and it

moves because of the ways we think about the information, and not because of the

information technologies themselves.

The GDPR and other data protection regulations require business leaders, directors, and

owners to make clear to customers and employees what data they collect and what they do

with it, which in turn implements the separation of that data into public and private data. As

an SSCP, you probably won’t make specific determinations as to whether certain kinds of

data are public or private, but you should be familiar with your organization’s privacy policies

and its procedures for carrying out its data protection responsibilities. Many of the

information security measures you will help implement, operate, and maintain are vital to

keeping the dividing line between public and private data clear and bright.

Confidentiality

Often thought of as “keeping secrets,” confidentiality is actually about sharing secrets.

Confidentiality is both a legal and ethical concept about privileged communications or

privileged information. Privileged information is information you have, own, or create, and

that you share with someone else with the agreement that they cannot share that knowledge

with anyone else without your consent, or without due process in law. You place your trust

and confidence in that other person’s adherence to that agreement. Relationships between

professionals and their clients, such as the doctor-patient or attorney-client ones, are prime

examples of this privilege in action. Except in very rare cases, courts cannot compel parties in

a privileged relationship to violate that privilege and disclose what was shared in confidence.

PRIVACY IS NOT CONFIDENTIALITY

As more and more headline-making data breaches occur, people are demanding greater

protection of personally identifiable information (PII) and other information about them as

individuals. Increasingly, this is driving governments and information security professionals to

see privacy as separate and distinct from confidentiality. While both involve keeping

close-hold, limited-distribution information safe from inadvertent disclosure, we’re beginning to

see that they may each require subtly different approaches to systems design, operation and

management to achieve.

Confidentiality refers to how much we can trust that the information we’re about to use to

make a decision has not been seen by unauthorized people. The term unauthorized people

generally includes anybody or any group of people who could learn something from our

confidential information, and then use that new knowledge in ways that would thwart our

plans to attain our objectives or cause us other harm.

Confidentiality needs dictate who can read specific information or files, or who can download

or copy them. This is very different from who can modify, create, or delete those files.

One way to think about this is that integrity violations change what we think we know;

confidentiality violations tell others what we think is our private knowledge.
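
The distinction between who can read or copy a file and who can modify or delete it can be made concrete with separate permission flags. This is a minimal sketch under assumed names: the Permission flags, the users, and the access list are hypothetical and do not describe any particular operating system's access control model.

```python
from enum import Flag, auto


class Permission(Flag):
    READ = auto()    # confidentiality concern: who may see the contents
    COPY = auto()    # confidentiality concern: who may download or duplicate
    MODIFY = auto()  # separate concern: who may change the contents
    DELETE = auto()  # separate concern: who may remove the file


# Hypothetical access list for one file.
acl = {
    "auditor": Permission.READ,
    "analyst": Permission.READ | Permission.COPY,
    "owner": Permission.READ | Permission.COPY | Permission.MODIFY | Permission.DELETE,
}


def allowed(user: str, needed: Permission) -> bool:
    """Return True if the user's grant includes the needed permission."""
    granted = acl.get(user, Permission(0))  # unknown users get nothing
    return needed in granted


print(allowed("auditor", Permission.READ))
print(allowed("auditor", Permission.MODIFY))
```

Because the flags are independent, granting someone the ability to read a file says nothing about whether they can change it, which mirrors the split between confidentiality and integrity needs.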

Integrity

Integrity, in the most common sense of the word, means that something is whole and

complete, and that its parts are smoothly joined together. People with high personal integrity

are ones whose actions and words consistently demonstrate the same set of ethical

principles. You know that you can count on them and trust them to act both in ways they

have told you they would and in ways consistent with what they’ve done before.

Integrity for information systems has much the same meaning. Can we rely on the

information we have and trust in what it is telling us?

This attribute reflects two important decision-making needs:

First, is the information accurate? Have we gathered the right data, processed it in the right

ways, and dealt with errors, wild points, or odd elements of the data correctly so that we can

count on it as inputs to our processes? We also have to have trust and confidence in those

processes—do we know that our business logic that combined experience and data to

produce wisdom actually works correctly?

Next, has the information been tampered with, or have any of the intermediate steps in

processing from raw data to finished “decision support data” been tampered with? This

highlights our need to trust not only how we get data, and how we process it, but also how

we communicate that data, store it, and how we authorize and control changes to the data

and the business logic and software systems that process that data.
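
One common way to detect tampering with stored or communicated data is to record a cryptographic digest when the data is known to be good and compare it later. The sketch below assumes SHA-256 from Python's standard hashlib module; the function names and sample record are hypothetical. It only helps if the recorded digest is itself kept where an attacker cannot change it.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unaltered(data: bytes, expected: str) -> bool:
    """Compare the data's current digest with the one recorded earlier."""
    # compare_digest performs the comparison in constant time.
    return hmac.compare_digest(fingerprint(data), expected)


# Hypothetical record: digest taken when the data was known to be good.
original = b"price_list: widget=4.99, gadget=12.50"
recorded = fingerprint(original)

tampered = b"price_list: widget=0.49, gadget=12.50"
print(is_unaltered(original, recorded))
print(is_unaltered(tampered, recorded))
```

A digest check answers only the second question above, whether the data has changed; it says nothing about whether the data was accurate in the first place.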

Integrity applies to three major elements of any information-centric set of processes: to the

people who run and use them, to the data that the people need to use, and to the systems or

tools that store, retrieve, manipulate, and share that data. We’ll look at all of these concepts

in greater depth in later chapters, but it’s important here to review what Chapter 1 said

about DIKW, or data, information, knowledge, and wisdom:

Data are the individual facts, observations, or elements of a measurement, such as a person’s

name or their residential address.

Information results when we process data in various ways; information is data plus

conclusions or inferences.

Knowledge is a set of broader, more general conclusions or principles that we’ve derived

from lots of information.

Wisdom is (arguably) the insightful application of knowledge; it is the “a-ha!” moment in

which we recognize a new and powerful insight that we can apply to solve problems with or

to take advantage of a new opportunity—or to resist the temptation to try!

You also saw in Chapter 1 that professional opinion in the IT and information systems world is

strongly divided about data versus D-I-K-W, with nearly equal numbers of people

holding that they are the same ideas, that they are different, and that the whole debate is

unnecessary. As an SSCP, you’ll be expected to combine experience, training, and the data

you’re observing from systems and people in real time to know whether an incident of

interest is about to become a security issue, whether or not your organization uses

knowledge management terminology like this. This is yet another example of just how many

potentially conflicting, fuzzy viewpoints exist in IT and information security.

Availability

Is the data there, when we need it, in a form we can use?

We make decisions based on information; whether that is new information we have gathered

(via our data acquisition systems) or knowledge and information we have in our memory, it’s

obvious that if the information is not where we need it, when we need it, we cannot make as

good a decision as we might need to:

The information might be in our files, but if we cannot retrieve it, organize it, and display it in

ways that inform the decision, then the information isn’t available.

If the information has been deleted, by accident, sabotage, or systems failure, then it’s not

available to inform the decision.

These might seem obvious, and they are. Key to availability requirements is that they specify

what information is needed; where it will need to be displayed, presented, or put in front of

the decision makers; and within what span of time the data is both available (displayed to the

decision makers) and meaningful. Yesterday’s data may not be what we need to make

today’s decision.
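
An availability requirement of this kind can be reduced to two checks: did the data reach the decision makers before the decision, and was it still recent enough to be meaningful? The sketch below is one possible reading; the is_available function, its parameters, and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def is_available(observed_at: datetime, delivered_at: datetime,
                 needed_by: datetime, max_age: timedelta) -> bool:
    """Return True if data arrived in time and is fresh enough to use."""
    on_time = delivered_at <= needed_by          # displayed before the decision
    fresh = needed_by - observed_at <= max_age   # still meaningful when used
    return on_time and fresh


decision_time = datetime(2024, 3, 2, 9, 0)
one_day = timedelta(days=1)

# Figures observed this morning and delivered an hour before the decision.
today = is_available(datetime(2024, 3, 2, 6, 0), datetime(2024, 3, 2, 8, 0),
                     decision_time, one_day)
# Figures observed two days earlier: delivered on time, but too old.
stale = is_available(datetime(2024, 2, 29, 6, 0), datetime(2024, 3, 2, 8, 0),
                     decision_time, one_day)
print(today, stale)
```

Data that fails either check is unavailable for that decision, even though it exists and can be retrieved.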

CIA IN REAL ESTATE DEVELOPMENT

Suppose you work in real estate, and you’ve come to realize that a particular area outside of

town is going to be a “path of progress” for future development. That stretch of land is in the

right place for others to build future housing areas, business locations, and entertainment

attractions; all it needs is the investment in roads and other infrastructure, and the

willingness of other investors to make those ideas become real projects. The land itself is

unused scrub land, not even suitable for raising crops or cattle; you can buy it for a hundredth

of what it might sell for once developers start to build on this path to progress.

Confidentiality: Note how your need for confidentiality about this changes with time. While

you’re buying up the land, you really don’t need or want competitors who might drive up the

prices on your prime choices of land. Once you’ve established your positions, however, you

need to attract the attention of other investors and of developers, who will use their money

and energy to make your dreams come true. This is an example of the time value of

confidentiality—nothing stays secret forever, but while it stays secret, it provides advantage.

Integrity: In that same “path of progress” scenario, you have to be able to check the accuracy

of the information you’ve been gathering from local landowners, financial institutions, and

business leaders in the community. You have to be able to tell whether one of those sources

is overly enthusiastic about the future and has exaggerated the potential for growth and

expansion in the area. Similarly, you cannot misrepresent or exaggerate that future potential

when you entice others to come and buy land from you to develop into housing or business

properties that they’ll sell on to other buyers.

Availability: You’ll need to be able to access recorded land titles and descriptions, and the

legal descriptions of the properties you’re thinking of buying and then holding for resale to

developers. The local government land registry still uses original paper documents and large

paper “plat” maps, and a title search (for information about the recorded owners of a piece

of land, and whether there are any liens recorded against it) can take a considerable amount

of time. If that time fits into your plans, the data you need is available; if you need to know

faster than the land registry can answer your queries, then it is not available.

Privacy vs. Security, or Privacy and Security?

It’s easy to trivialize this question by trotting out the formal definitions: privacy is freedom

from intrusion, and security is the protection of something or someone from loss, harm, or

injury, now or in the future. This reliance on the formal definitions alone hasn’t worked in the

past, and it’s doubtful that a logical debate will cool down the sometimes overly passionate

arguments that many people have on this topic.

Over the last 20 years, the increasing perception of the threat of terrorist attacks has brought

many people to think that strong privacy encourages terrorism and endangers the public and

our civilization. Strong privacy protections, these people claim, allow terrorists to “hide in

plain sight” and use the Internet and social media as their command, control,

communications, and intelligence systems. “If you’ve got nothing to hide,” these

uber-security zealots ask, “why do you need any privacy?”

But is this privacy-versus-security dilemma real or imagined? Consider, for example, how

governments have long argued that private citizens have no need of encryption to protect

their information; yet without strong encryption, there would be no way to protect online

banking, electronic funds transfers, or electronic purchases from fraud. Traffic and security

CCTV and surveillance systems can help manage urban problems, dispatch first responders

more effectively, and even help identify and detain suspects wanted by the police. But the

same systems can easily be used by almost anyone to spy on one’s neighbors, know when a

family is not at home, or stalk a potential victim. The very systems we’ve paid for (with our

taxes) become part of the threat landscape we have to face!

We will not attempt to lay out all of this debate here. Much of it is also beyond the scope of

the SSCP exam. But as an SSCP, you need to be aware of this debate. More and more, we are

moving our private lives into the public spaces of social media and the Web; as we do this, we

keep shifting the balance between information that needs to be protected and that which

ought to be published or widely shared. At the technical level, the SSCP can help people and

organizations carry out the policy choices they’ve made; the SSCP can also advise and assist in

the formulation of privacy and security policies, and even help craft them, as they grow in

professional knowledge, skills, and abilities.

“IF YOU’VE GOT NOTHING TO HIDE…”

This is not a new debate. In ancient Greece, even the architecture of its homes and the layout

of the city streets helped make private spaces possible, as witnessed by the barbed criticism

of Socrates:

“For where men conceal their ways from one another in darkness rather than in light, there

no man will ever rightly gain either his due honor or office or the justice that is befitting.”

Socrates seems to argue for the transparent society, one in which every action, anywhere,

anytime, is visible to anyone in society.

Take a look at Greg Ferenstein’s “The Birth and Death of Privacy: 3,000 Years of History Told

Through 46 Images,” at https://medium.com/the-ferenstein-wire/the-birth-and-death-of-privacy-3-000-years-of-history-in-50-images-614c26059e, to put this debate into context.

The problem seems to be that for most of history, this transparency has been in only one

direction: the powerful and wealthy, and their government officials, can see into everyone

else’s lives and activities, but the average citizen cannot see up into the doings of those

in power.

Who watches the watchers?

—Juvenal, Satire VI, lines 347–348, late first century/early second century AD.

Whether it’s the business of business, the functions of government, or the actions and

choices of individuals in our society, we can see that information is what makes everything

work. Information provides the context for our decisions; it’s the data about price and terms

that we negotiate about as buyers or sellers, and it’s the weather forecast that’s part of our

choice to have a picnic today at the beach. Three characteristics of information have long

been recognized as vital to our ability to make decisions about anything:

• If it is publicly known, we must have confidence that everybody knows it or can know it; if it is private to us or those we are working with, we need to trust that it stays private or confidential.

• The information we need must be reliable. It must be accurate enough to meet our needs and come to us in ways we can trust. It must have integrity.

• The information must be there when we need it. It must be available.

Those three attributes or characteristics—the confidentiality, integrity, and availability of the

information itself—reflect the needs we all have to be reasonably sure that we are making

well-informed decisions, when we have to make them, and that our competitors (or our

enemies!) cannot take undue or unfair advantage over us in the process. Information security

practitioners refer to this as the CIA of information security. Every information user needs

some CIA; for some purposes, you need a lot of it; for others, you can get by with more

uncertainty (or “less CIA”).
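
As a small illustration of the integrity attribute, the sketch below records a SHA-256 digest of a piece of information and later checks whether the information still matches it. The record text and the function names are hypothetical, chosen only for this example; the approach also assumes the recorded digest itself is stored somewhere an attacker cannot alter it.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def integrity_intact(data: bytes, expected_digest: str) -> bool:
    """Return True if data still hashes to the digest recorded earlier."""
    return sha256_digest(data) == expected_digest


# Hypothetical record, loosely modeled on a land-title entry.
original = b"Parcel 17: owner J. Smith; no liens recorded"
recorded = sha256_digest(original)

# A single changed character produces a different digest.
tampered = b"Parcel 17: owner J. Smyth; no liens recorded"

print(integrity_intact(original, recorded))  # True
print(integrity_intact(tampered, recorded))  # False
```

A digest on its own only detects change; it says nothing about who made the change or whether the information is confidential or available.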

Over the last decade, security professionals and risk managers have also placed greater

emphasis on two other aspects of information security:

• Virtually all online business depends upon each party being able to take action to fulfill requests made of them by others; but in doing so, they must have confidence that the requester will not subsequently deny that request. Change their mind and request a correction (such as “would you cancel that last order please?”), yes; deny that they made the order in the first place, no. Information must therefore be nonrepudiable, in order to protect buyers and sellers, bankers and customers, or even doctors and patients.

• Online business also depends upon trust: sellers trust that buyers are who they claim they are, and have the legal right to commit to a purchase, while buyers must depend upon the seller being who they claim to be and having the right to sell the products in question. Each must trust in the authority of the other party to enter into the agreement; the information they exchange must have authenticity.

These add to our CIA Triad to produce the mnemonic CIANA. The growing incidence of

cyberattacks on public infrastructures is also raising the emphasis on safety, while similarly,

the massive data breaches seen in the last few years highlight the need for better privacy

protections to be in place. This leads others in the security profession to use CIANA+PS as the

umbrella label for security needs, attributes, or requirements at the big-picture, strategic

level.
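
One way to picture authenticity in practice is a message authentication code. The sketch below is a minimal example, assuming a buyer and a seller who already share a secret key; the key, the order text, and the function names are hypothetical. Because both parties hold the same key, either one could have produced the tag, so this sketch shows authenticity only. Nonrepudiation usually calls for a digital signature made with a private key that only one party holds.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> str:
    """Compute an HMAC-SHA256 tag over message using the shared key."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def tag_is_valid(key: bytes, message: bytes, tag: str) -> bool:
    """Check the tag with a constant-time comparison."""
    return hmac.compare_digest(make_tag(key, message), tag)


# Hypothetical shared secret and order, for illustration only.
shared_key = b"hypothetical-shared-secret"
order = b"order: 3 widgets"
tag = make_tag(shared_key, order)

print(tag_is_valid(shared_key, order, tag))                 # True
print(tag_is_valid(shared_key, b"order: 30 widgets", tag))  # False
```

The second check fails because the message was altered after the tag was made, so the same mechanism also supports integrity.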

Throughout this course we’ll use the acronym best suited to the context, as not all situations

call for emphasis on all seven attributes. The following sections illustrate this. (Note that the

absence of an attribute in the items below should not be taken to suggest that that situation

has no need for that information security characteristic.)

CIANA+PS Needs of Individuals

Each of us has a private life, which we may share with family, friends, or loved ones. We

expect a reasonable degree of security in that private life. As taxpayers and law-abiding

members of our societies, whether we realize it or not, we have agreed to a social compact—

a contract of sorts between each of us as an individual and the society as a whole. We fulfill

our duties to society by obeying the laws, and society keeps us safe from harm. Society

defends us against other nations that want to conquer or destroy us; society protects us

against criminals; and society protects us against the prospects of choking on our own

garbage, sewage, or exhaust. In English, safety and security are two different words for two

concepts we usually keep separate; in Spanish, one word, seguridad, embraces both ideas

equally.

People may be people, but they can take on many different roles in a society. For example:

• Government officials or officers of the government have been appointed special authorities and responsibilities in law and act in the name of the government and the people of their jurisdiction. They must conduct their jobs in accordance with applicable laws and regulations, as well as be held to standards of conduct set by the government.

• Licensed professionals, such as doctors, engineers, lawyers, or the clergy or priesthood, are recognized (issued a license) by the government to provide services to people and organizations within the bounds of their profession. Those professions set standards for their practice and their conduct, which may or may not be reinforced by applicable law.

• Corporate officers and officials, business owners, and other key people in the operation of a business (or even a nonprofit organization) are responsible by law and in practice for due care and due diligence in the conduct of their business.

• Celebrities, such as entertainment or sports personalities, are typically private people whose choice of work or avocation has made them famous. Their particular business may be self-regulating, such as when Major League Baseball sanctions (punishes) a player for misusing performance-enhancing substances.

• Journalists, reporters, and those in the news and information media are believed to be part of keeping society informed and thus should be held to standards of objectivity, honesty, and fairness. Those standards may be enforced by their employers or the owners of the news media that they work for.

• Whistleblowers are individuals who see something that they believe is wrong, and then turn to people outside of their own context to try to find relief, assistance, or intervention. Historically, most whistleblowers have been responsible for bringing public pressure to bear to fix major workplace safety issues, child labor abuses, graft and corruption, or damage to the environment, in circumstances where the responsible parties could harass, fire, or sometimes even physically assault or kill the whistleblower.

• Private citizens are, so to speak, anybody who doesn’t fall into any of those categories. Private citizens are subject to law, of course, and to the commonly accepted ethical and behavioral standards of their communities.

It’s not hard to see how societies benefit as a whole when the sum total of law, ethics, and

information security practices provide the right mix of CIA for each of these kinds of

individuals.

Private Business’s Need for CIANA+PS

The fundamental fact of business life is competition. Competition dictates that decisions be

made in timely ways, with the most reliable information available at the time. It also means

that even the consideration of alternatives—the decisions the business is thinking about

making—need to be kept out of the eyes and ears of potential competitors. Ethical concepts

like fair play dictate that each business be able to choose where and when it will make its

decisions known to its marketplaces, to the general public, and to its competitors.

As business use of robotics, autonomous devices, and Internet of Things capabilities grows, so

too does the potential of unintended injury or property damage, if safety needs have not

been properly considered.

Government’s Need for CIANA+PS

Government agencies and officers of the government have comparable needs for availability

and integrity of the information that they use in making decisions. As for confidentiality,

however, government faces several unique needs.

First, government does have a responsibility to its citizens; as it internally deliberates upon a

decision, it needs to do so confidentially to avoid sending inappropriate signals to businesses,

the markets, and the citizens at large. Governments are made up of the people who serve in

them, and those people do need reasonable time in which to look at all sides of complex

issues. One example of this is when government is considering new contracts with businesses

to supply goods and services that government needs. As government contracts officers

evaluate one bidder’s proposal, it would be inappropriate and unfair to disclose the strengths

and weaknesses of that proposal to competitors, who might then (unfairly!) modify their own

proposals.

The law enforcement duties of government, for example, may also dictate circumstances in

which it is inappropriate or downright dangerous to let the identity of a suspect or a key

witness be made public knowledge.

Many nations consider that the ultimate role of government is to ensure public safety. Much

work needs to be done in this regard, in almost every country.

The Modern Military’s Need for CIA

Military needs for confidentiality of information present an interesting contrast.

Deterrence—the strategy of making your opponents fear the consequences of attacking you,

and so leading them to choose other courses of action—depends on your adversary having a

good idea of just what your capabilities are and believing that you’ll survive their attack and

be able to deal a devastating blow to them regardless. Yet you cannot let them learn too

much, or they may find vulnerabilities in your systems and strategies that they can exploit.

Information integrity and availability are also crucial to the modern military’s decision

making. The cruise missile attack on the offices of the Chinese Embassy in Belgrade,

Yugoslavia, during the May 1999 NATO war against the Yugoslavian government illustrates

this. NATO and USAF officials confirmed that the cruise missile went to the right target and

flew in the right window on the right floor to destroy the Yugoslavian government office that

was located there—except, they say, they used outdated information and didn’t realize that

the building had been rented out to the Chinese Embassy much earlier. Whether this was a

case of bad data availability in action—right place, wrong tenant at wrong time—or whether

there was some other secret targeting strategy in action depends on which Internet

speculations you wish to follow.

Do Societies Need CIANA+PS?

Whether or not a society is a functioning democracy, most Western governments and their

citizens believe that the people who live in a country are responsible for the decisions that

their government makes and carries out in their names. The West holds the citizens of other

countries responsible for what they let their governments do; so, too, do the enemies of

Western societies hold the average citizens of those societies responsible.

Just as with due care and due diligence, citizens cannot meet those responsibilities if they are

not able to rely on the information that they use when they make decisions. Think about the

kind of decisions you can make as a citizen:

• Which candidates do I vote for when I go to the polls on Election Day? Which party has my best interests at heart?

• Is the local redevelopment agency working to make our city, town, or region better for all of us, or only to help developers make profits at the taxpayers’ expense?

• Does the local water reclamation board keep our drinking water clean and safe?

• Do the police work effectively to keep crime under control? Are they understaffed or just badly managed?

Voters need information about these and many other issues if they are going to be able to

trust that their government, at all levels, is doing what they need done.

Prior to the Internet, many societies kept their citizens, voters, investors, and others

informed by means of what were called the newspapers of record. Sometimes this term

referred to newspapers published by the government (such as Izvestia during the

Soviet era); these were easily criticized for being little more than propaganda outlets.

Privately owned newspapers such as The New York Times, Le Figaro, and the Times of London

developed reputations in the marketplace for separating their reporting of verifiable facts

about newsworthy events from their editorial opinions and explanations of the meanings

behind those facts. With these newspapers of record, a society could trust that the average

citizen knew enough about events and issues to be able to place faith and confidence in the

government, or to vote the government out at the next election as the issues might demand.

Radio, and then television, gave us further broadcasting of the news—as with the

newspapers, the same story would be heard, seen, or read by larger and larger audiences.

With multiple, competing newspapers, TV, and radio broadcasters, it became harder for one

news outlet to outright lie in its presentation of a news story. (It’s always been easy to ignore

a story.)

Today’s analytics-driven media and the shift to “infotainment” have seen narrowcasting

replace broadcasting in many news marketplaces. Machine learning algorithms watch your

individual search history and determine the news stories you might be interested in—and

quite often don’t bother you with stories the algorithms think you are not interested in. This

makes it much more difficult for people who see a need for change to get their message

across; it also makes it much easier to suppress the news a whistleblower might be trying to

make public.

Other current issues, such as the outcry about “fake news,” should raise our awareness of

how nations and societies need to be able to rely on readily available news and information

as they make their daily decisions. It’s beyond the scope of the SSCP exam to tackle this

dilemma, but as an SSCP, you may be uniquely positioned to help solve it.

Training and Educating Everybody

“The people need to know” is more than just “We need a free press.” People in all walks of

life need to know more about how their use of information depends on a healthy dose of CIA

and how they have both the ability and responsibility to help keep it that way.

You’ve seen by now that whether we’re talking about a business’s leaders and owners, its

workers, its customers, or just the individual citizens and members of a society, everybody

needs to understand what CIA means to them as they make decisions and take actions

throughout their lives. As an SSCP, you have a significant opportunity to help foster this

learning, whether as part of your assigned job or as a member of the profession and the

communities you’re a part of.

In subsequent chapters, we’ll look more closely at how the SSCP plays a vital role in keeping

their business information systems safe, secure, and resilient.

SSCPs and Professional Ethics

“As an SSCP” is a phrase we’ve used a lot so far. We’ve used it two different ways: to talk about

the opportunities facing you, and to talk about what you will have to know as you rise up to

meet those opportunities.

There is a third way we need to use that phrase, and perhaps it’s the most important of them

all. Think about yourself as a Systems Security Certified Practitioner in terms of the “three

dues.” What does it mean to you to live up to the responsibilities of due care and due diligence,

and thus ensure that you meet or exceed the requirements of due process?

(ISC)² provides us a Code of Ethics, and to be an SSCP you agree to abide by it. It is short and

simple. It starts with a preamble, which we quote in its entirety:

The safety and welfare of society and the common good, duty to our principals, and to each

other, requires that we adhere, and be seen to adhere, to the highest ethical standards of

behavior.

Therefore, strict adherence to this Code is a condition of certification.

Let’s operationalize that preamble—take it apart, step by step, and see what it really asks of us:

1. Safety and welfare of society: Allowing information systems to come to harm because of

the failure of their security systems or controls can lead to damage to property, or injury

or death of people who were depending on those systems operating correctly.

2. The common good: All of us benefit when our critical infrastructures, providing common

services that we all depend on, work correctly and reliably.

3. Duty to our principals: Our duties to those we regard as leaders, rulers, or our

supervisors in any capacity.

4. Our duty to each other: To our fellow SSCPs, others in our profession, and to others in

our neighborhood and society at large.

5. Adhere and be seen to adhere to: Behave correctly and set the example for others to

follow. Be visible in performing our job ethically (in adherence with this Code) so that

others can have confidence in us as a professional and learn from our example.

The code is equally short, containing four canons or principles to abide by:

• Protect society, the common good, necessary public trust and confidence, and the infrastructure.

• Act honorably, honestly, justly, responsibly, and legally.

• Provide diligent and competent service to principals.

• Advance and protect the profession.

The canons do more than just restate the preamble’s two points. They show us how to adhere

to the preamble. We must take action to protect what we value; that action should be done

with honor, honesty, and justice as our guide. Due care and due diligence are what we owe to

those we work for (including the customers of the businesses that employ us).

The final canon addresses our continued responsibility to grow as a professional. We are on a

never-ending journey of learning and discovery; each day brings an opportunity to make the

profession of information security stronger and more effective. We as SSCPs are members of a

worldwide community of practice—the informal grouping of people concerned with the safety,

security, and reliability of information systems and the information infrastructures of our

modern world.

In ancient history, there were only three professions—those of medicine, the military, and the

clergy. Each had in its own way the power of life and death of individuals or societies in its

hands. Each as a result had a significant burden to be the best at fulfilling the duties of that

profession. Individuals felt the calling to fulfill a sense of duty and service, to something larger

than themselves, and responded to that calling by becoming a member of a profession.

This, too, is part of being an SSCP.

EXERCISE 2.1

NUCLEAR MEDICINE AND CIA

In 1982, Atomic Energy of Canada Limited (AECL) began marketing a new model of its Therac

line of X-ray treatment machines to hospitals and clinics in Canada, the United States, and Latin

American countries. Previous models had used manually set controls and mechanical safety

interlocks to prevent patients or staff from being exposed to damaging or lethal radiation

levels. The new Therac-25 used a minicomputer and software to do all of these functions. But

inadequate software test procedures, and delays in integrating the software and the X-ray

control systems, meant that the Therac-25 went to market without rigorously demonstrating

these new computer-controlled safety features worked correctly. In clinical use in the U.S.,

Canada, and other countries, patients were being killed or seriously injured by the machine;

AECL responded slowly if at all to requests for help from clinicians and field support staff.

Finally, the problems became so severe that the system was withdrawn from the market.

Wikipedia provides a good place to get additional information on this famous case study, which

you can see at https://en.wikipedia.org/wiki/Therac-25. This is a well-studied case, and the

Web has many rich information sources, analyses, and debates that you can (and

should) learn from. Dr. Nancy Leveson from MIT offers an insightful retrospective, asking what

progress we’ve made (or failed to make) in this regard;

see https://www.computer.org/csdl/magazine/co/2017/11/mco2017110008/13rRUxAStVR for

further details.

Without going into this case in depth, how would you relate the basic requirements for

information security (confidentiality, integrity, availability) to what happened? Does this case

demonstrate that safety and reliability, and information security, are two sides of the same

coin? Why or why not?

If you had been working at AECL as an information security professional, what would have been

your ethical responsibilities, and to whom would you have owed them?

Summary

Our Internet-enabled, e-commerce-driven world simply will not work without trustworthy,

reliable information exchanges. Trust and reliability, as we’ve seen, stem from the right mix

of confidentiality, privacy, and integrity in the ways we gather, process, use and share

information. It’s also clear that if reliable, trustworthy information isn’t where we need it,

when we need it, we put the decisions we’re about to make at risk; without availability, our

safe and secure information isn’t useful; it’s not reliable. These needs for trustworthy,

reliable information and information systems are equally important to governments and

private businesses; and they are vitally important to each of us as individuals, whether as

citizens or as consumers.

These fundamental aspects of information security—the CIA triad plus privacy, nonrepudiation, authenticity, and safety—tie directly into our responsibilities in law and in

ethics as information systems security professionals. As SSCPs, we have many

opportunities to help our employers, our clients, and our society achieve the right mix of

information security capabilities and practices.

From here, we move on to consider risk—what it is and how to manage and mitigate it, and

why it’s the central theme as we plan to defend our information from all threats.

Essay Writing Service Features

Our Experience

No matter how complex your assignment is, we can find the right professional for your specific task. Achiever Papers is an essay writing company that hires only the smartest minds to help you with your projects. Our expertise allows us to provide students with high-quality academic writing, editing & proofreading services.

Free Features

Free revision policy

$10Free bibliography & reference

$8Free title page

$8Free formatting

$8How Our Dissertation Writing Service Works

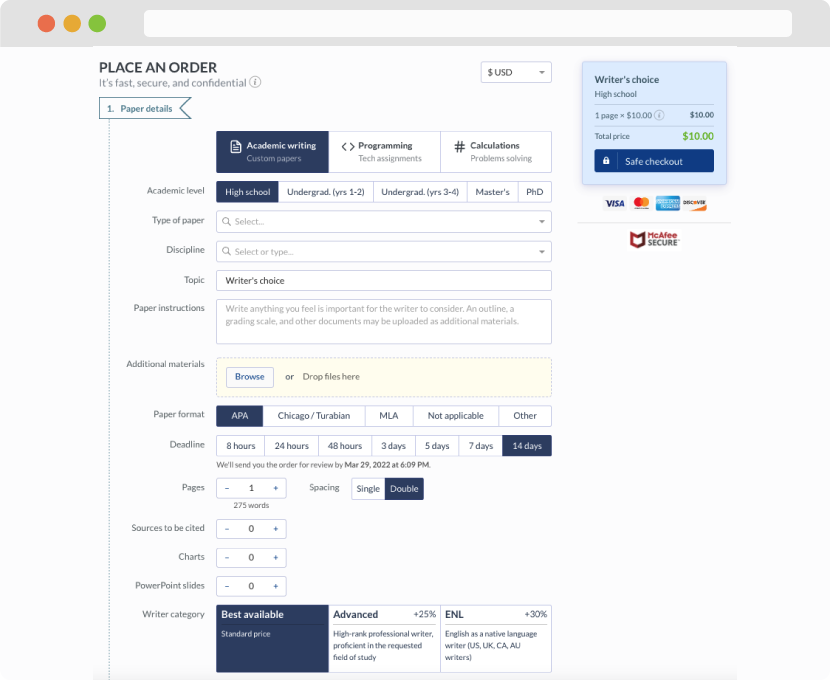

First, you will need to complete an order form. It's not difficult but, if anything is unclear, you may always chat with us so that we can guide you through it. On the order form, you will need to include some basic information concerning your order: subject, topic, number of pages, etc. We also encourage our clients to upload any relevant information or sources that will help.

Complete the order form

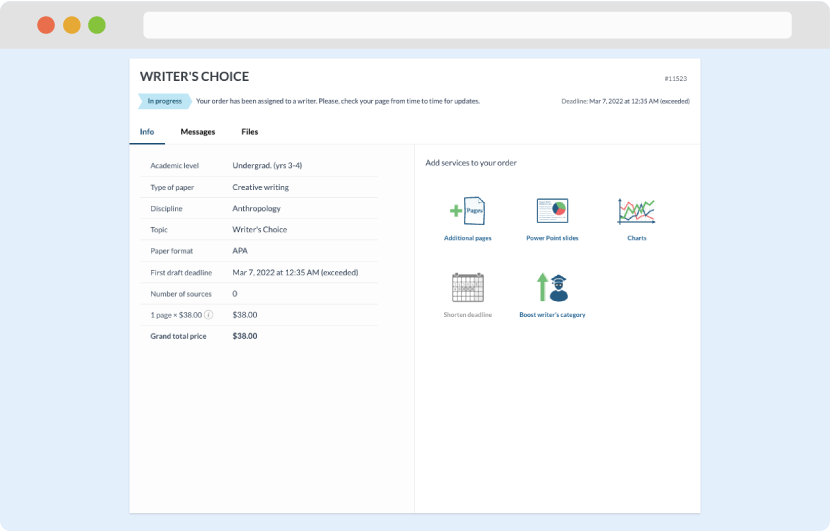

Once we have all the information and instructions that we need, we select the most suitable writer for your assignment. While everything seems to be clear, the writer, who has complete knowledge of the subject, may need clarification from you. It is at that point that you would receive a call or email from us.

Writer’s assignment

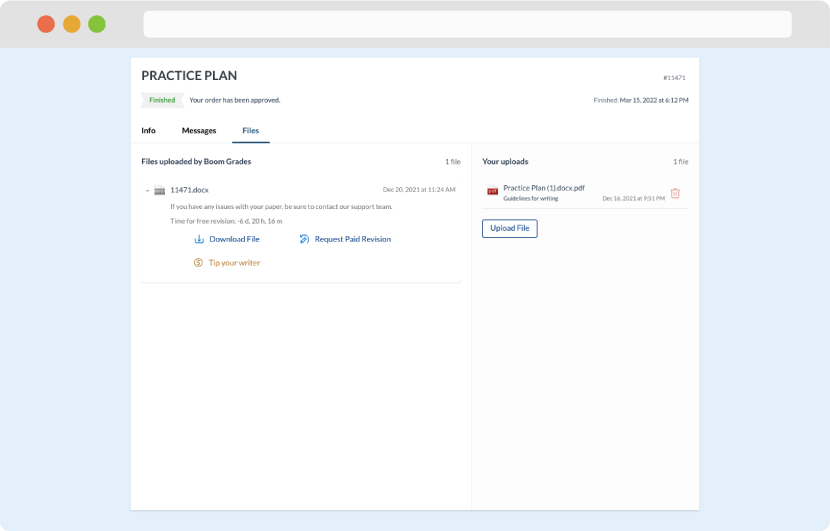

As soon as the writer has finished, it will be delivered both to the website and to your email address so that you will not miss it. If your deadline is close at hand, we will place a call to you to make sure that you receive the paper on time.

Completing the order and download