
Regulation for Nursing Practice Staff Development Meeting

Nursing is a highly regulated profession. There are more than 100 boards of nursing (BONs) and national nursing associations throughout the United States and its territories. These organizations help regulate, inform, and promote the nursing profession. With so many organizations, it can be difficult to distinguish between BONs and nursing associations, and overwhelming to weigh the various benefits and options each one offers.

Both boards of nursing and national nursing associations have significant impacts on the nurse practitioner profession and scope of practice. Understanding these differences helps lend credence to your expertise as a professional. In this Assignment, you will practice the application of such expertise by communicating a comparison of boards of nursing and professional nurse associations. You will also share an analysis of your state board of nursing.

Resources

Be sure to review the Learning Resources before completing this activity.


Learning Resources

Required Readings

Short, N. M. (2022). Milstead’s health policy and politics: A nurse’s guide (7th ed.). Jones & Bartlett Learning.

Chapter 7, “Government Response: Regulation” (pp. 147–173)

American Nurses Association. (n.d.). ANA enterprise. Retrieved September 20, 2018, from http://www.nursingworld.org

Bosse, J., Simmonds, K., Hanson, C., Pulcini, J., Dunphy, L., Vanhook, P., & Poghosyan, L. (2017). Position statement: Full practice authority for advanced practice registered nurses is necessary to transform primary care. Nursing Outlook, 65(6), 761–765.

Halm, M. A. (2018). Evaluating the impact of EBP education: Development of a modified Fresno test for acute care nursing. Worldviews on Evidence-Based Nursing, 15(4), 272–280. doi:10.1111/wvn.12291

National Council of State Boards of Nursing (NCSBN). (n.d.). Retrieved September 20, 2018, from https://www.ncsbn.org/index.htm

Neff, D. F., Yoon, S. H., Steiner, R. L., Bumbach, M. D., Everhart, D., & Harman, J. S. (2018). The impact of nurse practitioner regulations on population access to care. Nursing Outlook, 66(4), 379–385.

Peterson, C., Adams, S. A., & DeMuro, P. R. (2015). mHealth: Don’t forget all the stakeholders in the business case. Medicine 2.0, 4(2), e4.

Required Media

Walden University, LLC. (Producer). (2018). The Regulatory Process [Video file]. Baltimore, MD: Author.

Walden University, LLC. (Producer). (2018). Healthcare economics and financing [Video file]. Baltimore, MD: Author.

Walden University, LLC. (Producer). (2018). Quality improvement and safety [Video file]. Baltimore, MD: Author.

To Prepare:

Assume that you are leading a staff development meeting on regulation for nursing practice at your healthcare organization or agency.

Review the NCSBN and ANA websites to prepare for your presentation.

The Assignment: (8- to 9-slide PowerPoint presentation)

Develop an 8- to 9-slide PowerPoint presentation that addresses the following:

Describe the differences between a board of nursing and a professional nurse association.

Describe the board for your specific region/area.

Who is on the board?

How does one become a member of the board?

Describe at least one state regulation related to general nurse scope of practice.

How does this regulation influence the nurse’s role?

How does this regulation influence delivery, cost, and access to healthcare?

If a patient is from another culture, how would this regulation impact the nurse’s care/education?

Describe at least one state regulation related to Advanced Practice Registered Nurses (APRNs).

How does this regulation influence the nurse’s role?

How does this regulation influence delivery, cost, and access to healthcare?

Has there been any change to the regulation within the past 5 years? Explain.

Include speaker notes on each slide (except the title slide and the reference slide).

By Day 7 of Week 6

Submit your Regulation for Nursing Practice Staff Development Meeting Presentation.

Submission Information

Before submitting your final assignment, you can check your draft for authenticity. To check your draft, access the Turnitin Drafts from the Start Here area.

To submit your completed assignment, save your Assignment as WK6Assgn+LastName+Firstinitial

Then, click on Start Assignment near the top of the page.

Next, click on Upload File and select Submit Assignment for review.


Original Article

Evaluating the Impact of EBP Education: Development of a Modified Fresno Test for Acute Care Nursing

Margo A. Halm, PhD, RN, NEA-BC

Keywords: modified Fresno, EBP education/competencies, acute care nursing, novice-to-expert, psychometrics

ABSTRACT

Background: Proficiency in evidence-based practice (EBP) is essential for relevant research findings to be integrated into clinical care when congruent with patient preferences. Few valid and reliable tools are available to evaluate the effectiveness of educational programs in advancing EBP attitudes, knowledge, skills, or behaviors, and ongoing competency. The Fresno test is one objective method to evaluate EBP knowledge and skills; however, the original and modified versions were validated with family physicians, physical therapists, and speech and language therapists.

Aims: To adapt the modified Fresno test for acute care nursing (the Modified Fresno-Acute Care Nursing test) and develop a psychometrically sound tool for use in academic and practice settings.

Methods: In Phase 1, modified Fresno (Tilson, 2010) items were adapted for acute care nursing. In Phase 2, content validity was established with an expert panel. Item-level content validity indices (I-CVIs) ranged from .75 to 1.0, and the scale CVI was .95. In Phase 3, a cross-sectional convenience sample of acute care nurses (n = 90) in novice, master, and expert cohorts completed the Modified Fresno-Acute Care Nursing test, administered electronically via SurveyMonkey.

Findings: Total scores differed significantly between training levels (p < .0001). Novice nurses scored significantly lower than master or expert nurses, but no differences were found between the latter two cohorts. Total score reliability was acceptable: interrater reliability (ICC [2,1]) was .88, and Cronbach’s alpha was .70. Psychometric properties of most modified items were satisfactory; however, six items require further revision and testing to meet acceptable standards.

Linking Evidence to Action: The Modified Fresno-Acute Care Nursing test is a 14-item test for objectively assessing EBP knowledge and skills of acute care nurses. While preliminary psychometric properties for this new EBP knowledge measure for acute care nursing are promising, further validation of some of the items and scoring rubric is needed.

INTRODUCTION

Over a decade ago, the Institute of Medicine (IOM, 2001) recognized evidence-based practice (EBP) as a key solution for delivering care with the highest clinical effectiveness known to science. To reach the IOM’s (2007, p. ix) 2020 goal that “90% of clinical decisions will be supported by accurate, timely and up-to-date clinical information that reflects the best available evidence,” nurses need EBP competencies to ensure that relevant research findings are integrated into clinical situations when congruent with patient preferences (Melnyk, Gallagher-Ford, Long, Long, & Fineout-Overholt, 2014).

BACKGROUND

A recent evidence synthesis reported 10 studies evaluating the effectiveness of educational interventions in building EBP attitudes, knowledge, skills, and behaviors of nurses (Halm, 2014). Interventions were primarily workshops or immersion programs, but seminars, journal clubs, and EBP and research councils were also studied. Outcomes were evaluated via: (a) self-reported EBP attitude, knowledge, and behavior (Chang et al., 2013; Dizon, Somers, & Kumar, 2012; Edward & Mills, 2013; Leung, Trevana, & Waters, 2014); (b) PICO questions and activity diaries (Dizon et al., 2012); (c) Edmonton Research Orientation (Gardner, Smyth, Renison, Cann, & Vicary, 2012) and Clinical Effectiveness or EBP Questionnaire (Sciarra, 2011; Toole, Stichler, Ecoff, & Kath, 2013; White-Williams et al., 2013); and (d) interviews and focus groups to identify qualitative themes about nurses’ experience in EBP programs (Balakas, Sparks, Steurer, & Bryant, 2013; Nesbitt, 2013; Wendler, Samuelson, Taft, & Eldridge, 2011). Varied measurement across studies limited estimation of the effectiveness of EBP training (Dizon et al., 2012).

In a systematic review, Shaneyfelt et al. (2006) recommended valid and responsive methods to evaluate the programmatic impact of EBP education and progression in EBP competencies. Because self-report is prone to bias (Lai & Teng, 2011; Shaneyfelt et al., 2006), objective knowledge tests that incorporate multiple-choice or short answers with case-based decision-making, such as the Berlin Questionnaire (Fritsche, Greenhalgh, Falck-Ytter, Neumayer, & Kunz, 2002) or the Fresno test, were recommended to evaluate EBP knowledge and skills (Shaneyfelt et al., 2006). The Fresno test, a valid and reliable method to evaluate EBP knowledge and skills using a standardized scoring rubric, has been validated with family physicians (Ramos et al., 2003), physical therapists (Miller, Cummings, & Tomlinson, 2013; Tilson, 2010), and speech and language therapists (Spek, de Wolf, van Dijk, & Lucas, 2012).

Worldviews on Evidence-Based Nursing, 2018; 15:4, 272–280.

© 2018 Sigma Theta Tau International

SPECIFIC AIMS

As objective methods for assessing EBP knowledge and skills of nurses are lacking, the specific aim of this study was to fill a measurement gap by adapting the modified Fresno test (Tilson, 2010) for acute care nursing. Only with consistent use of psychometrically sound methods can useful evidence be generated about the effectiveness of various EBP teaching strategies: new knowledge that can direct effective educational and professional development programs for students and practicing nurses. The specific research question was: Will an adapted Fresno test discriminate EBP knowledge and skills among novice, master, and expert acute care nurses?

METHODS

Research Design

A cross-sectional cohort design was used to replicate Tilson’s (2010) modified Fresno test (Figure 1).

Phase 1: Test adaptation. New acute care nursing scenarios were developed for items #1–8, which otherwise remained unchanged. Item #9 (clinical expertise) was retained even though Tilson (2010) removed it because of poor psychometric performance. Items #10–13 were modified for acute care, although their EBP focus was unchanged. Item #14 was modified to ask about the best design for studying the meaning of experience.

Phase 2: Content validity. Content validity was established with a panel of four master’s- and doctorally prepared acute care EBP experts from practice and academic settings. In round one, panelists rated each item and rubric for clarity, importance, and comprehensiveness on a 5-point Likert scale. Panelists provided feedback on whether items should be retained, revised, dropped, or added (Polit & Beck, 2012). In round two, items #10 (mathematical calculations for sensitivity and positive predictive value) and #11 (relative and absolute risk reduction) were replaced because the panel did not believe acute care nurses would be expected to make these calculations without a resource. The replacement items, which the panel also reviewed, assess tool reliability/validity and the application of qualitative findings. The scoring rubric (Figure S1) was modified to reflect item alterations and ensure scoring consistency across subjects and raters (Jonsson & Svingby, 2007). In addition to a single overall score, a passing score was defined as >50% of available points for individual items (Tilson, 2010). This passing score was set lower than that defined as “mastery of material” (Ramos, Schafer, & Tracz, 2003) to reduce the risk of a floor effect with novices.

Figure 1. Study flowchart.

An item-level content validity index (I-CVI) was calculated for individual items by dividing the number of ratings of 4 or 5 by the number of experts. Mean (M) item ratings were 4.54 (clarity), 4.82 (importance), and 4.75 (comprehensiveness). Only item 12 had an I-CVI value <.78, because the panel rated interpreting confidence intervals lower on importance for acute care nurses. The scale CVI of .95, calculated by averaging I-CVIs, exceeded the acceptable standard of >.90 (Polit & Beck, 2007; Table 1).
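The I-CVI and scale CVI arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's procedure: `item_cvi` and `scale_cvi_ave` are hypothetical helper names, the single-item ratings are invented, and only the 14 I-CVI values come from Table 1. Values such as .92 are not multiples of 1/4, so the study's denominator may have pooled ratings across the three rated dimensions; that is an assumption, and the sketch simply averages the reported values.

```python
from statistics import mean

def item_cvi(ratings):
    """I-CVI: share of expert ratings that are 4 or 5 on the 5-point scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    relevant = sum(1 for r in ratings if r >= 4)
    return relevant / len(ratings)

def scale_cvi_ave(item_cvis):
    """Scale CVI (averaging method): mean of the item-level CVIs."""
    return mean(item_cvis)

# Hypothetical ratings from four experts for one item.
example_ratings = [5, 4, 4, 3]
print(item_cvi(example_ratings))  # 0.75

# I-CVIs for items 1-14 as reported in Table 1.
reported_i_cvis = [.92, 1.0, 1.0, .92, .92, .92, 1.0, 1.0, 1.0, .92, 1.0, .75, 1.0, 1.0]
s_cvi = scale_cvi_ave(reported_i_cvis)
print(round(s_cvi, 2))  # 0.95
print(s_cvi > 0.90)     # True
```

Averaging the reported I-CVIs reproduces the .95 scale CVI stated in the text.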

Phase 3: Validation of modified Fresno. After Institutional Review Board exemption was obtained, invitations were emailed to three cohorts: (a) novice nurses (less than 2 years of experience after graduation from a baccalaureate program) from three U.S. Magnet hospitals; (b) master nurses (master’s prepared) recruited via the National Association of Clinical Nurse Specialists listserv; and (c) expert nurses (doctorally prepared) recruited via the American Nurses Credentialing Center’s Magnet program directors’ listserv and faculty at Bethel University (St. Paul, MN, USA). Nurses in the expert cohort self-affirmed their EBP expertise and teaching experience. Up to 1 hr (in one sitting) was allowed to complete the test with no external resources; only notepaper and calculators were permitted. Reminder e-mails were sent at 2 and 4 weeks. A $10 gift certificate incentive was offered upon completion. Participants who did not answer every item were excluded from their cohort; only participants with a complete exam were included in the analysis. Data were collected in 2015.

Table 1. Modified Fresno Test Items (n = 90)

| Item | EBP step or component | Topic | I-CVI | Possible score | Passing score | Novices (n = 30) M (SD) | Masters (n = 30) M (SD) | Experts (n = 30) M (SD) | p value* |
|---|---|---|---|---|---|---|---|---|---|
| 1 | INQUIRE | PICO question | .92 | 0–24 | >12 | 13.73 (7.37) | 19.47 (3.71) | 18.13 (4.55) | .001 (N-M, N-E) |
| 2 | ACQUIRE | Sources | 1.0 | 0–24 | >12 | 15.03 (6.53) | 20.33 (5.09) | 17.53 (6.05) | .004 (N-M) |
| 3 | APPRAISE | Treatment design | 1.0 | 0–24 | >12 | 5.80 (6.77) | 10.50 (6.90) | 11.90 (5.87) | .001 (N-M, N-E) |
| 4 | ACQUIRE | Search | .92 | 0–24 | >12 | 13.93 (5.06) | 16.53 (4.69) | 15.10 (4.69) | .18 |
| 5 | APPRAISE | Relevance | .92 | 0–24 | >12 | 7.47 (6.31) | 9.77 (6.83) | 12.03 (6.72) | .03 (N-E) |
| 6 | APPRAISE | Validity | .92 | 0–24 | >12 | 7.30 (6.75) | 10.67 (7.77) | 10.23 (7.38) | .16 |
| 7 | APPRAISE | Significance | 1.0 | 0–24 | >12 | 3.40 (3.94) | 9.97 (8.18) | 7.70 (7.03) | .001 (N-M, N-E) |
| 8 | PATIENT PREFERENCES | Patient preference | 1.0 | 0–16 | >8 | 6.13 (4.36) | 8.20 (5.59) | 9.00 (4.95) | .08 |
| 9 | CLINICAL EXPERTISE | Clinical expertise | 1.0 | 0–8 | >4 | 4.80 (3.04) | 5.60 (2.49) | 6.40 (2.49) | .08 |
| 10 | APPLY | Tools | .92 | 0–12 | >6 | 3.90 (4.18) | 8.50 (3.35) | 7.00 (4.12) | .001 (N-M, N-E) |
| 11 | APPLY | Qualitative | 1.0 | 0–16 | >8 | 12.13 (4.75) | 10.93 (5.35) | 12.53 (6.19) | .50 |
| 12 | APPRAISE | Confidence intervals | .75 | 0–4 | >2 | .13 (.73) | .40 (1.22) | 1.07 (1.80) | .02 (N-E) |
| 13 | APPRAISE | Design diagnosis | 1.0 | 0–4 | >2 | .27 (1.01) | .27 (1.01) | .27 (1.01) | 1.00 |
| 14 | APPRAISE | Design meaning | 1.0 | 0–4 | >2 | 2.13 (2.03) | 3.73 (1.01) | 3.87 (.73) | .001 (N-M, N-E) |
| Total scores | | | .95 (scale CVI) | 0–232 | >116 | 96.17 (26.14) | 134.87 (30.76) | 132.77 (28.94) | .001 (N-M, N-E) |

*Scheffe post-hoc analysis: N = Novices; M = Masters; E = Experts.

Two doctorally prepared nurses with expertise in teaching EBP served as raters after an orientation to the test items and scoring rubric. Before scoring commenced, raters practiced scoring three pilot tests from the three cohorts and resolved discrepancies that could threaten interrater reliability (IRR; e.g., halo effect, leniency or stringency, and central tendency errors; Castorr et al., 1990). A midway refresher session allowed raters to review scores, reducing the threat of rater drift (Castorr et al., 1990). Data were analyzed with SPSS Version 23.0 (IBM Corp., Armonk, NY, USA).

RESULTS

Descriptive Statistics

The total sample of 90 nurses included (a) new graduates (n = 30), (b) master’s prepared CNSs (n = 30), and (c) doctorally prepared nurses (n = 30). Seventy-six percent completed the test within 60 min (83% of novices, 70% of masters, 73% of experts). Mean completion times were 56.43 min (standard deviation [SD] 38.21) for novices, 57.20 min (SD 42.54) for masters, and 43.21 min (SD 26.33) for experts.

Table 2. Psychometric Properties of Individual Items (n = 90)

| Item # | Topic | ICC | IDI | ITC | % passed: All (n = 90) | % passed: Novices (n = 30) | % passed: Masters (n = 30) | % passed: Experts (n = 30) | χ² | p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PICO question | .78 | .43 | .53 | 85.6 | 63.3 | 100.0 | 93.3 | 18.52 | .0001 |
| 2 | Sources | .78 | .35 | .53 | 84.4 | 73.3 | 93.3 | 86.7 | 4.74 | .09 |
| 3 | Treatment design | .86 | .61 | .56 | 44.4 | 26.7 | 50.0 | 56.7 | 6.03 | .05 |
| 4 | Search | .72 | .26 | .48 | 80.0 | 76.7 | 86.7 | 76.7 | 1.25 | .54 |
| 5 | Relevance | .48 | .65 | .63 | 35.6 | 26.7 | 33.3 | 46.7 | 2.72 | .26 |
| 6 | Validity | .47 | .43 | .50 | 32.2 | 20.0 | 43.3 | 33.3 | 3.76 | .15 |
| 7 | Significance | .74 | .52 | .57 | 26.7 | 6.7 | 40.0 | 33.3 | 9.55 | .01 |
| 8 | Patient preference | .55 | .52 | .39 | 52.2 | 36.7 | 50.0 | 70.0 | 6.77 | .03 |
| 9 | Clinical expertise | .23 | .22 | .40 | 88.9 | 80.0 | 93.3 | 93.3 | 3.60 | .17 |
| 10 | Tools | .76 | .74 | .68 | 68.9 | 40.0 | 90.0 | 76.7 | 18.77 | <.0001 |
| 11 | Qualitative | .68 | .17 | .31 | 88.9 | 93.3 | 90.0 | 83.3 | 1.58 | .46 |
| 12 | Confidence intervals | .90 | .04 | .12 | 13.3 | 3.3 | 10.0 | 26.7 | 7.50 | .02 |
| 13 | Design diagnosis | .61 | .13 | .12 | 6.7 | 6.7 | 6.7 | 6.7 | .00 | 1.0000 |
| 14 | Design meaning | .89 | .35 | .37 | 81.1 | 53.3 | 93.3 | 96.7 | 22.77 | <.0001 |
| Total score | | .88 | N/A | N/A | | | | | | .0001 |

ICC = intraclass correlation coefficient; IDI = item discrimination index; ITC = corrected item-total correlation.

Reliability Statistics

IRR was calculated using intraclass correlation coefficients (ICC) for the total score and individual items (Table 2). Total score reliability was high at .88. Of the 14 items, 3 had excellent reliability (>.80), 7 had moderate reliability (.60–.79), and 4 had questionable reliability (<.60). Items with questionable IRR focused on relevance (#5), validity (#6), patient preference (#8), and clinical expertise (#9). A Cronbach’s alpha coefficient of .70 was obtained for internal consistency of the modified exam.
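The two reliability statistics named above can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not the study's SPSS analysis: `icc_2_1` implements the two-way random-effects, absolute-agreement, single-rater form (the ICC [2,1] cited in the abstract) from ANOVA mean squares, `cronbach_alpha` applies the usual k/(k − 1) variance formula, and both function names and the 6 × 4 score matrix are hypothetical.

```python
from statistics import variance

def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    scores[i][j] is the score that rater j gave to subject (test) i.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Partition the total sum of squares into subjects, raters, and error.
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores[i][j] is respondent i's score on item j."""
    k = len(item_scores[0])
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical 6 x 4 matrix: rows are tests (or respondents); columns are
# raters for the ICC and items for alpha.
data = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(data), 2))         # 0.29
print(round(cronbach_alpha(data), 2))  # 0.91
```

The absolute-agreement ICC penalizes systematic differences between raters (the rater term), which is why it is far lower than alpha on the same matrix.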

The item discrimination index (IDI) was calculated for each item by separating total scores into quartiles and subtracting the proportion of nurses in the bottom quartile who passed that item (>50% of points per item was passing) from the proportion in the top quartile who passed the same item. The 50% threshold has been defined as “mastery of material” (Ramos et al., 2003) and used in similar validation studies (Tilson, 2010). IDI ranges from –1.0 to 1.0, representing the difference in passing rate between nurses with high (top 25%) and low (bottom 25%) overall scores. Eleven of the 14 items had acceptable IDIs (>.20; Table 2). Item-total correlation and corrected item-total correlation (ITC) were assessed using Pearson correlation coefficients. Twelve of the 14 items had acceptable ITCs (>.30; Table 2). Items with low IDI and ITC focused on confidence intervals (#12) and design for diagnostic tests (#13). Qualitative findings (#11) also had a low IDI.
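The IDI and corrected ITC procedures described above can be sketched as follows. This is a minimal sketch with hypothetical function names and invented data; the quartile groups use floor(n/4) respondents each and break ties by input order, which are assumptions the text does not specify.

```python
from statistics import mean

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def item_discrimination(item_scores, totals, max_points):
    """IDI: pass rate in the top quartile of total scores minus the bottom quartile.

    An item counts as passed when its score exceeds 50% of max_points.
    Ties at the quartile boundaries are broken by input order (an assumption).
    """
    n = len(totals)
    q = max(1, n // 4)  # number of respondents in each quartile group
    order = sorted(range(n), key=lambda i: totals[i])
    bottom, top = order[:q], order[-q:]

    def passed(i):
        return item_scores[i] > 0.5 * max_points

    p_top = sum(passed(i) for i in top) / q
    p_bottom = sum(passed(i) for i in bottom) / q
    return p_top - p_bottom

def corrected_itc(item_scores, totals):
    """Correlation of an item with the total score after removing that item."""
    rest = [t - s for s, t in zip(item_scores, totals)]
    return pearson(item_scores, rest)

# Hypothetical scores on one 0-4 point item and on the whole test for 8 nurses.
item = [0, 3, 2, 4, 3, 1, 4, 4]
totals = [60, 80, 95, 110, 120, 140, 150, 170]
print(item_discrimination(item, totals, max_points=4))  # 0.5
print(round(corrected_itc(item, totals), 2))            # 0.54
```

Subtracting the item from the total before correlating keeps the item from inflating its own ITC.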

Total Score Analysis

No floor or ceiling effect was apparent, indicating the test is applicable from novice to expert (Figure 2). As shown in Table 1, the novice cohort’s total mean score (M 96.17, SD 26.14) did not reach the passing score of 116, whereas the master (M 134.87, SD 30.76) and expert (M 132.77, SD 28.94) cohorts did. One-way analysis of variance (ANOVA) demonstrated that overall mean scores differed significantly between cohorts, F (2, 87) = 17.58, p < .0001. A post-hoc Scheffe comparison showed that novice total mean scores (M 96.17, SD 26.14) differed significantly from those of master (M 134.87, SD 30.07, d = 1.36) and expert nurses (M 132.77, SD 28.94, d = 1.33). Cohen’s d is an effect size measure that expresses the standardized difference between two means and is commonly reported with ANOVAs or t tests. There were no significant differences between the master and expert cohorts.

Figure 2. Box plots for sum scores.
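The F ratio and Cohen's d reported above can be approximated from the Table 1 summary statistics. This is a sketch under stated assumptions: it uses only the rounded means and SDs (not the raw scores), the pooled-SD form of d, and hypothetical helper names, so the F it prints may not match the reported value exactly. With 90 nurses in 3 cohorts, the within-groups degrees of freedom are 90 − 3 = 87.

```python
from math import sqrt

def one_way_anova_from_summary(groups):
    """One-way ANOVA F from (n, mean, sd) per group; returns (F, df_between, df_within)."""
    n_total = sum(n for n, _, _ in groups)
    k = len(groups)
    grand = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d using the pooled standard deviation of two groups."""
    pooled = sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled

# Summary statistics from Table 1: (n, M, SD) per cohort.
novices = (30, 96.17, 26.14)
masters = (30, 134.87, 30.76)
experts = (30, 132.77, 28.94)

f_stat, df_b, df_w = one_way_anova_from_summary([novices, masters, experts])
print(df_b, df_w)  # 2 87
print(round(f_stat, 2))

d_nm = cohens_d(masters[1], masters[2], masters[0], novices[1], novices[2], novices[0])
d_ne = cohens_d(experts[1], experts[2], experts[0], novices[1], novices[2], novices[0])
print(round(d_nm, 2), round(d_ne, 2))  # 1.36 1.33
```

With the Table 1 SDs, the pooled-SD effect sizes match the reported d = 1.36 and d = 1.33.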

Item Score Comparison

Post-hoc Scheffe analysis also revealed significant cohort differences in eight items (Table 1). Novice nurses scored significantly lower than master nurses, expert nurses, or both on PICO (#1), sources (#2), treatment design (#3), relevance (#5), significance (#7), tools (#10), confidence intervals (#12), and design meaning (#14). In addition, mean scores for four items increased progressively across cohorts, from novice to master and then from master to expert: treatment design (#3), relevance (#5), patient preference (#8), and confidence intervals (#12). Although not all items performed in this manner, these four items demonstrated progressive mastery of EBP material across cohorts.

Item Difficulty

Item difficulty was calculated as the proportion of nurses who achieved a passing score on each item (Table 2). Of the 14 items, none were easy (difficulty > .80). Ten items (71%) were moderate (> .30), and 4 (29%) were difficult (< .30; Janda, 1998; Nunnally & Bernstein, 1994). In testing individual items, the mean scores of all three cohorts fell below the passing cutoff for five items: treatment design (#3), validity (#6), significance (#7), confidence intervals (#12), and diagnosis design (#13). Novice and master nurses did not achieve a passing score for relevance (#5), while novices did not pass patient preferences (#8) or tools (#10).
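The pass rule and difficulty categories used above can be sketched as follows. This is an illustrative sketch only: the helper names and the ten item scores are hypothetical, and treating values from .30 to .80 inclusive as "moderate" is an assumption, since the text gives only the > .80 and < .30 cutoffs.

```python
def passes(score, possible):
    """An item is passed when the score is more than 50% of its possible points."""
    return score > 0.5 * possible

def difficulty_index(scores, possible):
    """Proportion of respondents who passed the item."""
    return sum(passes(s, possible) for s in scores) / len(scores)

def classify(p):
    """Label a difficulty index using the cutoffs in the text (.80 and .30)."""
    if p > 0.80:
        return "easy"
    if p < 0.30:
        return "difficult"
    return "moderate"  # assumption: values from .30 to .80 inclusive

# Hypothetical scores of 10 nurses on a 0-24 point item (passing score > 12).
scores = [24, 16, 12, 8, 20, 4, 13, 0, 18, 10]
p = difficulty_index(scores, possible=24)
print(p, classify(p))  # 0.5 moderate
```

Note that a score of exactly 12 on a 0–24 item does not pass, matching the ">12" passing scores in Table 1.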

Using chi-square analysis, seven items showed significant differences in the proportion of passing scores between cohorts (Table 2). Masters scored highest on PICO (#1), significance (#7), and tools (#10). Experts performed best on treatment design (#3), design meaning (#14), patient preferences (#8), and confidence intervals (#12).
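The chi-square comparison of pass rates can be reproduced from Table 2. A minimal sketch, assuming pass counts back-calculated from the reported percentages (for example, 63.3% of 30 = 19) and hypothetical helper names; the shortcut p = exp(−χ²/2) holds only for 2 degrees of freedom, which is the case for three cohorts by pass/fail. For item 1 it recovers the tabled χ² of 18.52.

```python
from math import exp

def chi_square_pass_by_cohort(passed, n_per_cohort):
    """Pearson chi-square for a cohorts x (pass, fail) table; returns (chi2, df)."""
    failed = [n - p for p, n in zip(passed, n_per_cohort)]
    grand_total = sum(n_per_cohort)
    column_totals = (sum(passed), sum(failed))
    if 0 in column_totals:
        raise ValueError("chi-square is undefined when everyone passed or everyone failed")
    chi2 = 0.0
    for p, f, n in zip(passed, failed, n_per_cohort):
        for observed, col_total in ((p, column_totals[0]), (f, column_totals[1])):
            expected = n * col_total / grand_total  # row total x column total / N
            chi2 += (observed - expected) ** 2 / expected
    df = (len(passed) - 1) * (2 - 1)
    return chi2, df

def p_value_df2(chi2):
    """Upper-tail p-value for chi-square with exactly 2 df, which equals exp(-x / 2)."""
    return exp(-chi2 / 2)

# Item 1 (PICO): pass counts back-calculated from Table 2 (63.3%, 100%, 93.3% of 30).
chi2, df = chi_square_pass_by_cohort([19, 30, 28], [30, 30, 30])
print(round(chi2, 2), df)         # 18.52 2
print(p_value_df2(chi2) < .0001)  # True
```

The same call with item 14's counts ([16, 28, 29]) gives the tabled 22.77.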

In examining item discrimination based on the proportion of nurses who passed each item (Table 2), some items with significant cohort differences did not discriminate well between masters and experts: (a) PICO (#1), (b) treatment design (#3), (c) significance (#7), and (d) design meaning (#14). Items on sources (#2), search (#4), relevance (#5), validity (#6), and clinical expertise (#9) discriminated on the IDI, but their pass rates did not differ significantly among the three cohorts (p > .05).

DISCUSSION

The Modified Fresno-Acute Care Nursing test is a 14-item test for assessing EBP knowledge and skills. While the original test assessed core principles of EBP steps, this replication validated items on patient preferences and clinical expertise to fully assess all EBP domains. The test has excellent content validity, with I-CVIs ranging from .75 to 1.0 and an overall scale CVI of .95. Internal consistency was acceptable at .70. Table 3 compares the psychometric properties of the Modified Fresno-Acute Care Nursing test with the original and modified tests.

Total scale reliability for the two independent raters was excellent (.88). IRR for individual items was good to excellent for 10 of 14 items (71%). One reason IRR may have been lower for relevance (#5) and validity (#6) was the rubric complexity, which required raters to consider responses to both items when scoring. As in Tilson (2010), IRR was less than desirable for patient preference (#8) and clinical expertise (#9). Some leniency in scoring may have occurred with #8 when a nurse offered a phrase that could elicit patient preferences rather than stating it as a question, as specified in the rubric. As recommended by Tilson (2010), clinical expertise should be retained because it covers an essential EBP domain, but further revision and validation are needed.

Item difficulty was moderate to high. Two items retained from Tilson’s (2010) version had low IDI and ITC: confidence intervals (#12) and design for diagnosis (#13). These items were difficult across cohorts and did not discriminate. Of the new items, tools (#10) had acceptable psychometrics across ICC, IDI, and ITC. The second new item, qualitative findings (#11), had acceptable ICC and ITC but a low IDI and did not discriminate across cohorts. This finding may reflect the long-standing emphasis on qualitative findings across levels of nursing education and practice.

While some items did not perform ideally, these items remain valuable to the larger research goal of developing an objective and responsive method to evaluate EBP knowledge and skills. Reasons for poor item performance may include item characteristics, unknown sample characteristics, scoring concerns, or a combination of these factors. Six items (#5, #6, #9, #11, #12, and #13) need to be revised and retested before any decision to remove them. Although Tilson (2010) dropped clinical expertise (#9), it covers an important EBP domain that other researchers recognized as essential for measurement (Miller et al., 2013).

A range in item difficulty is best so that both the high and low ends of ability can be evaluated. For item #12 (confidence intervals), the IDI was low, most likely due to the low base success rate; however, it did discriminate the high end of EBP knowledge among cohorts. This item replaced a mathematical calculation and should be retained because of the growing importance of understanding confidence intervals, although it may need to be revised. Similarly, item #13 (design diagnosis) was difficult. This item should be retained but reworded to clarify that it refers to the selection and interpretation of diagnostic tests.

Table 3. Comparison of Reliability and Validity of Fresno Tests

| Performance measure/acceptable results | Original Fresno (Ramos et al., 2003) | Dutch adapted Fresno (Spek et al., 2012) | Modified Fresno-physical therapy (Tilson, 2010) | Modified Fresno-Acute Care Nursing test (Halm, 2018, current study) |
|---|---|---|---|---|
| Population | Family physicians | Speech language, clinical epidemiology students | Physical therapy | Acute care nurses |
| Total score/# items | 212/12 | 212/12 | 224/13 | 232/14 |
| Content validity: scale CVI/>.90 | Not reported | .92 | Not reported | .95 |
| Interrater reliability: interrater correlation/>.60 | Items: .72–.96; total score: .97 | Items: not reported; total score: .99 | Items: .41–.99; total score: .91 | Items: .23–.90; total score: .88 |
| Internal reliability: Cronbach’s alpha/>.70 | .88 | .83 | .78 | .70 |
| Internal reliability: item-total correlation (ITC)/>.30 | .47–.75 | .31–.76 | .20–.66 | .12–.68 |
| Item discrimination: item discrimination index (IDI)/>.20 | .41–.86; no items had weak or negative discrimination | Not reported | .25–.68; no items had weak or negative discrimination | .04–.74; 3 items had weak discrimination |
| Construct validity: comparison of mean cohort scores | Novice = 95.6+; Expert = 147.5; more passed all items (p < .05) | Year 1 students = 26.3*; Year 2 students = 69.3*; Year 3 students = 89.1*; Masters students = 154.2* | Novice = 92.8; Trained = 118.5; Expert = 149.0++; more passed 11 items (p < .03–.01) | Novices = 96.17++; Masters = 134.87, more passed 3 items (p < .01–.0001); Experts = 132.77, more passed 4 items (p < .01–.0001) |

*p < .05; +p < .001; ++p < .0001.

Item #14 (design meaning) may have been too easy. This item should be retained but reworded so that it is more difficult. Because item #11 was labeled qualitative, it may have primed nurses, so item #14 (design meaning) should be moved earlier in the test. Based on ITC performance, the rubric for item #11 (qualitative) needs to be more demanding, requiring more specific or unusually helpful or insightful advice to better differentiate between a best possible (16 points) answer and a more limited (8 points) answer.

No floor or ceiling effects were evident, indicating that EBP knowledge and skills, and not clinical experience, influenced mean score differences (Tilson, 2010). Mastery of EBP material was evident from novice to expert nurses on four items. The test discriminated well between novice nurses and masters or experts, but not across all three cohorts. Historical threats to validity may be one explanation: because EBP is an evolving concept, some nurses may not have had similar exposure to EBP in doctoral education. Interestingly, acute care nurses had longer times to completion (M 56.43, SD 38.21 for novices; M 57.20, SD 42.54 for masters; M 43.21, SD 26.33 for experts) than those reported by Tilson (M 33.2, SD 8.7 for novices; M 34.8, SD 10.0 for masters; M 40.5, SD 15.5 for experts). These differences may be due to the sample or to changes in the Fresno test.

Table 4. Uses of the Modified Fresno-Acute Care Nursing Test

| Setting | Self-assessment | Pre–post assessment |
|---|---|---|
| Academic settings | 1. Students could use individual items and scoring rubric as a guide when learning each EBP step/component. 2. Educators could periodically take the test before and after teaching EBP courses to identify areas for continual learning to advance levels of EBP expertise. | 1. Faculty could use pre–post scores to evaluate EBP education in academic programs (BSN, MSN, DNP, PhD). Test scores could assist curriculum design/redesign, and assessment of the quality/rigor of course content, teaching styles, and methods. 2. Objective test scores could show how student outcomes are improving, data that can be used for accreditation purposes. |
| Acute care settings | 1. Clinical/advanced practice nurses can use individual items and scoring rubric as a guide for learning each EBP step/component. 2. Clinical nurses could take the test to assess EBP strengths and areas for improvement before attending EBP educational activities (Ramos et al., 2003). | 1. Acute care educators and researchers could use pre–post scores to evaluate EBP education for clinical nurses. Identified gaps would inform needs for orientation/ongoing staff development opportunities that advance EBP competencies. 2. Scores could be tracked to monitor EBP knowledge/skill progression of nurses in attaining higher levels of EBP competency. A 10% change is meaningful in evaluating improvement in EBP skills over time (McCluskey & Bishop, 2009). EBP knowledge/skills could be assessed for new hires, existing nurses, as well as members of journal clubs, EBP/research and policy/procedure committees responsible for revising policies/procedures/protocols/guidelines based on best available evidence. |

EVIDENCE TO ACTION

The findings from this sample suggest that EBP topics need reinforcement with acute care nurses in academic and practice settings. Acute care nurses at all levels would benefit from more education on the appropriateness of designs for different research questions, as well as assessment of validity, clinical and statistical significance, and confidence intervals. Novice nurses need more guidance in assessing patient preferences and applicability of tools for practice. Both novice and master nurses need more education on assessing study relevance. Areas for EBP education or reinstruction should align with the national EBP competencies developed by Melnyk et al. (2014) for clinical and advanced practice nurses. These competencies provide the road map for expected levels of EBP in the clinical setting.

Scores derived from the Modified Fresno-Acute Care Nursing test have many uses in both academic and practice settings. As described in Table 4, the test and scoring rubric can be used as self-study and assessment guides. While test scores could be used in a pre–post fashion to document the impact of educational programs in advancing the EBP knowledge, skills, and competencies of acute care nurses, the Modified Fresno-Acute Care Nursing test needs to undergo further validation before such use occurs in practice or academia.
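The pre–post use described above, together with the 10% threshold cited from McCluskey and Bishop (2009) in Table 4, can be illustrated with a short Python sketch. It rests on stated assumptions: the names `PrePostScore`, `change_fraction`, and `is_meaningful_improvement` are hypothetical; reading "a 10% change" as a gain of ten percentage points of the maximum possible score is an assumption (a change relative to the baseline score is another plausible reading); and the maximum score is left as a parameter rather than taken from the test. As the paragraph cautions, flags like these would only be appropriate once the test has been further validated.

```python
from dataclasses import dataclass

# Assumption: "a 10% change" is read as a gain of 10 percentage points of the
# maximum possible total score; the article does not confirm this reading.
MEANINGFUL_CHANGE = 0.10


@dataclass
class PrePostScore:
    nurse_id: str  # hypothetical identifier
    pre: float     # total score before EBP education
    post: float    # total score after EBP education


def change_fraction(score: PrePostScore, max_score: float) -> float:
    """Gain in total score expressed as a fraction of the maximum possible score."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    return (score.post - score.pre) / max_score


def is_meaningful_improvement(score: PrePostScore, max_score: float,
                              threshold: float = MEANINGFUL_CHANGE) -> bool:
    """True when the pre-post gain reaches the threshold (10% by default)."""
    return change_fraction(score, max_score) >= threshold


if __name__ == "__main__":
    # Hypothetical scores on a hypothetical 100-point scale.
    cohort = [PrePostScore("A", 40, 52), PrePostScore("B", 40, 44)]
    for s in cohort:
        print(s.nurse_id, is_meaningful_improvement(s, max_score=100))
```

Keeping `max_score` and `threshold` as parameters lets an educator substitute the validated scale maximum and whichever definition of "meaningful change" the final validation supports.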

LIMITATIONS

The first limitation is the lack of demographic information for this small U.S. sample. Length of time since graduation and years of EBP experience were not captured and may have influenced performance on the test. The sample of doctorally prepared nurses who were recruited as EBP experts is a further limitation because the test did not differentiate well between experts and masters. Experts spent on average 13 min less time completing the test and thus may not have thoroughly documented their EBP knowledge. The scores obtained in these sample cohorts are not generalizable globally to acute care nurses because the emphasis and amount of EBP education may differ in general and across levels of nursing education in developing or developed countries (Ciliska, 2005; Deng, 2015; Holland & Magama, 2017).

Secondly, the scoring rubric is complex. Raters need EBP experience and training to ensure reliable use of the rubric. Pilot testing with opportunities to clarify scoring procedures is essential for IRR. At least 10–15 min should be allocated to score each test (Ramos et al., 2003; Tilson, 2010). This scoring time could be a limitation if an educator or researcher desires an easy assessment to evaluate competency or the effectiveness of EBP education. Manual grading also increases rater burden, especially if large numbers of nurses or students will be assessed. Another limitation was that the raters were not blinded to the cohorts during scoring. Intrarater reliability was also not assessed, as it was by Tilson (2010).
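Interrater reliability for a rubric like this is typically summarized with an intraclass correlation, and the Conclusions report ICC(2,1). As a sketch, assuming the Shrout and Fleiss two-way random-effects, single-rater, absolute-agreement form, ICC(2,1) can be computed from a tests-by-raters score matrix as below. The function name `icc_2_1` and the input layout are illustrative assumptions; the article does not describe the software or exact computation it used.

```python
def icc_2_1(ratings: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, single rater, absolute agreement.

    ratings[i][j] is the score rater j gave to subject (test) i.
    """
    n = len(ratings)     # subjects (scored tests)
    k = len(ratings[0])  # raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete matrix with >= 2 subjects and >= 2 raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares with one observation per cell.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_err = ss_total - ss_rows - ss_cols                     # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


if __name__ == "__main__":
    # Toy data: two raters agree exactly on three tests, so ICC(2,1) is 1.0.
    print(icc_2_1([[10, 10], [14, 14], [18, 18]]))
```

The absolute-agreement form penalizes systematic differences between raters (for example, one rater scoring every test higher), which is the relevant concern when different raters must apply the same rubric interchangeably.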

RECOMMENDATIONS FOR RESEARCH

The Modified Fresno-Acute Care Nursing test needs further revision and testing. The Delphi method could be used to engage numerous EBP experts in reaching consensus on how to revise items with poor psychometric performance. These items could then be tested with larger samples of novice, master, and expert acute care nurses.

Once the test is validated, its administration should include self-assessment of EBP expertise because educational level alone cannot predict level of EBP expertise. Future research should use the test to evaluate the effectiveness of face-to-face versus online EBP education and to compare teaching pedagogies, such as didactic versus case study methodologies. Ramos et al. (2003) suggested that other reliable methods be developed to assess, through simulation, the application of EBP knowledge and skills in real clinical scenarios. Such simulation methods could be compared with the Modified Fresno-Acute Care Nursing test to establish further validity.

CONCLUSIONS

Total scores differed significantly across training levels (p < .0001). Novices scored significantly lower than master or expert nurses, but no significant difference was found between masters and experts. Total score reliability was acceptable: interrater reliability, ICC(2,1) = .88; Cronbach’s alpha = .70. Psychometric properties of most modified items were acceptable; however, six items require further revision and testing to meet acceptable standards. While the preliminary psychometric properties of this new EBP knowledge measure are promising, further validation of some of the items and of the scoring rubric is needed.
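Cronbach’s alpha, the internal-consistency statistic reported above, follows the standard formula α = k/(k − 1) · (1 − Σ s²ᵢ / s²ₓ), where k is the number of items, s²ᵢ is the variance of item i, and s²ₓ is the variance of total scores. The Python sketch below applies that formula to a respondents-by-items matrix. The function name `cronbach_alpha` and the data layout are hypothetical, and no item-level data from this study are used.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha where scores[i][j] is respondent i's score on item j."""
    n = len(scores)
    k = len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with >= 2 respondents and >= 2 items")

    item_variances = [variance(col) for col in zip(*scores)]  # per-item sample variance
    total_variance = variance([sum(row) for row in scores])   # variance of total scores
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Toy data: two items that move in lockstep give alpha = 1.0.
    print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))
```

Because the same (sample) variance estimator is used for the items and the total, the n − 1 denominators cancel in the ratio, so using population variance instead would give the same alpha.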

LINKING EVIDENCE TO ACTION

• Educators in practice and academic settings can reinforce a variety of EBP topics:
   – NOVICES: Assessing patient preferences; evaluating applicability of tools for practice
   – NOVICES & MASTERS: Assessing relevance of studies for the PICO question of interest
   – ALL NURSES: Matching research designs to various types of questions; assessing validity of studies; understanding clinical versus statistical significance; interpreting confidence intervals
• Align evidence-based education with national EBP competencies for clinical nurses and advanced practice nurses (Melnyk et al., 2014)
• Acute care nurses at all levels can use the Modified Fresno-Acute Care Nursing test as a self-study and assessment guide.

Author information


Dr. Margo A. Halm, Associate Chief Nurse Executive, Nursing Research & Evidence-Based Practice, VA Portland Health Care System, Portland, OR. At the time this work was completed, Dr. Halm served as the Director, Nursing Research, Professional Practice & Magnet, Salem Health, Salem, OR. The contents of this article do not represent the views of the US Department of Veterans Affairs or the US Government.

Address correspondence to Dr. Margo A. Halm, Associate Chief Nurse Executive, Nursing Research & Evidence-Based Practice, VA Portland Health Care System, 3710 SW Veterans Hospital Road, Portland, OR; margo.halm@va.gov

Accepted 12 February 2017

Copyright © 2018, Sigma Theta Tau International

References

Balakas, K., Sparks, L., Steurer, L., & Bryant, T. (2013). An outcome of evidence-based practice education: Sustained clinical decision-making among bedside nurses. Journal of Pediatric Nursing, 28, 479–485.

Castorr, A., Thompson, K., Ryan, J., Phillips, C., Prescott, P., & Soeken, K. (1990). The process of rater training for observational instruments: Implications for interrater reliability. Research in Nursing & Health, 13, 311–318.

Chang, S., Huang, C., Chen, S., Liao, Y., Lin, C., & Wang, H. (2013). Evaluation of a critical appraisal program for clinical nurses: A controlled before-and-after study. Journal of Continuing Education in Nursing, 44(1), 43–48.

Ciliska, D. (2005). Educating for evidence-based practice. Journal of Professional Nursing, 21(6), 345–350.

Deng, F. (2015). Comparison of nursing education among different countries. Chinese Nursing Research, 2, 96–98.

Dizon, J., Somers, K., & Kumar, S. (2012). Current evidence on evidence-based practice training in allied health: A systematic review of the literature. International Journal of Evidence-Based Healthcare, 10, 347–360.

Edward, K., & Mills, C. (2013). A hospital nursing research enhancement model. Journal of Continuing Education in Nursing, 44(10), 447–454.

Fritsche, L., Greenhalgh, T., Falck-Ytter, Y., Neumayer, H., & Kunz, R. (2002). Do short courses in evidence-based medicine improve knowledge and skills? Validation of Berlin questionnaire and before and after study of courses in evidence based medicine. BMJ, 325, 1338–1341.

Gardner, A., Smyth, W., Renison, B., Cann, T., & Vicary, M. (2012). Supporting rural and remote area nurses to utilise and conduct research: An intervention study. Collegian, 19, 97–105.

Halm, M. (2014). Science-driven care: Can education alone get us there by 2020? American Journal of Critical Care, 23(4), 339–343.

Holland, S., & Magama, M. (2017). Evidence based practice translated through global nurse partnerships. Nurse Education in Practice, 22, 80–82.

Institute of Medicine. (2001). Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academies Press.

Institute of Medicine. (2007). Roundtable on evidence-based medicine: The learning healthcare system: Workshop summary. In L. Olsen, D. Aisner, & J. McGinnis (Eds.). Washington, DC: National Academies Press. Retrieved from www.ncbi.nlm.nih.gov/books/NBK53483

Janda, L. (1998). Psychological testing: Theory and applications. Needham Heights, MA: Allyn & Bacon.

Jonsson, A., & Svingby, G. (2007). The use of scoring rubrics: Reliability, validity and educational consequences. Educational Research Review, 2, 130–144.

Lai, N., & Teng, C. (2011). Self-perceived competence correlates poorly with objectively measured competence in evidence based medicine among medical students. BMC Medical Education, 11(1), 1. https://doi.org/10.1186/1472-6920-11-25

Leung, K., Trevena, L., & Waters, D. (2014). Systematic review of instruments for measuring nurses’ knowledge, skills and attitudes for evidence-based practice. Journal of Advanced Nursing, 70(10), 2181–2195.

McCluskey, A., & Bishop, B. (2009). The adapted Fresno test of competence in evidence-based practice. Journal of Continuing Education in the Health Professions, 29(2), 119–126.

Melnyk, B., Gallagher-Ford, L., Long, E., Long, L., & Fineout-Overholt, E. (2014). The establishment of evidence-based practice competencies for practicing registered nurses and advanced practice nurses in real-world clinical settings: Proficiencies to improve healthcare quality, reliability, patient outcomes, and cost. Worldviews on Evidence-Based Nursing, 11(1), 5–15.

Miller, A., Cummings, N., & Tomlinson, J. (2013). Measurement error and detectable change for the modified Fresno test in first-year entry-level physical therapy students. Journal of Allied Health, 42(1), 169–174.

Nesbitt, J. (2013). Journal clubs: A two-site case study of nurses’ continuing professional development. Nurse Education Today, 33, 896–900.

Nunnally, J., & Bernstein, I. (1994). Psychometric theory. New York, NY: McGraw-Hill.

Polit, D., & Beck, C. (2007). The content validity index: Are you sure you know what’s being reported? Research in Nursing & Health, 29, 489–497.

Polit, D., & Beck, C. (2012). Nursing research: Generating and assessing evidence for nursing practice. Philadelphia, PA: Lippincott.

Ramos, K., Schafer, S., & Tracz, C. (2003). Validation of the Fresno test of competence in evidence based medicine. BMJ, 326, 319–321.

Sciarra, E. (2011). Impacting practice through evidence-based education. Dimensions of Critical Care Nursing, 30(5), 269–275.

Shaneyfelt, T., Baum, K., Bell, D., Feldstein, D., Houston, T., Kaatz, S., . . . Green, M. (2006). Instruments for evaluating education in evidence-based practice. Journal of the American Medical Association, 296, 1116–1127.

Spek, B., de Wolf, G., van Dijk, N., & Lucas, C. (2012). Development and validation of an assessment instrument for teaching evidence-based practice to students in allied health care: The Dutch modified Fresno. Journal of Allied Health, 41(2), 77–82.

Tilson, J. (2010). Validation of the modified Fresno test: Assessing physical therapists’ evidence based practice knowledge and skills. BMC Medical Education, 10, 1–9.

Toole, B., Stichler, J., Ecoff, L., & Kath, L. (2013). Promoting nurses’ knowledge in evidence-based practice. Journal for Nurses in Professional Development, 29(4), 173–181.

Wendler, M., Samuelson, S., Taft, L., & Eldridge, K. (2011). Reflecting on research: Sharpening nurses’ focus through engaged learning. Journal of Continuing Education in Nursing, 42(11), 487–493.

White-Williams, C., Patrician, P., Fazell, P., Degges, M., Graham, S., Andison, M., . . . McCaleb, A. (2013). Use, knowledge, and attitudes toward evidence-based practice among nursing staff. Journal of Continuing Education in Nursing, 44(6), 246–254.

doi: 10.1111/wvn.12291

WVN 2018;15:272–280

SUPPORTING INFORMATION

Additional supporting information may be found online in the Supporting Information section at the end of the article.

Figure S1. MODIFIED FRESNO TEST – ACUTE CARE NURSING (14-item), with Scoring Rubric



Essay Writing Service Features

Our Experience

No matter how complex your assignment is, we can find the right professional for your specific task. Achiever Papers is an essay writing company that hires only the smartest minds to help you with your projects. Our expertise allows us to provide students with high-quality academic writing, editing & proofreading services.

Free Features

Free revision policy

$10Free bibliography & reference

$8Free title page

$8Free formatting

$8How Our Dissertation Writing Service Works

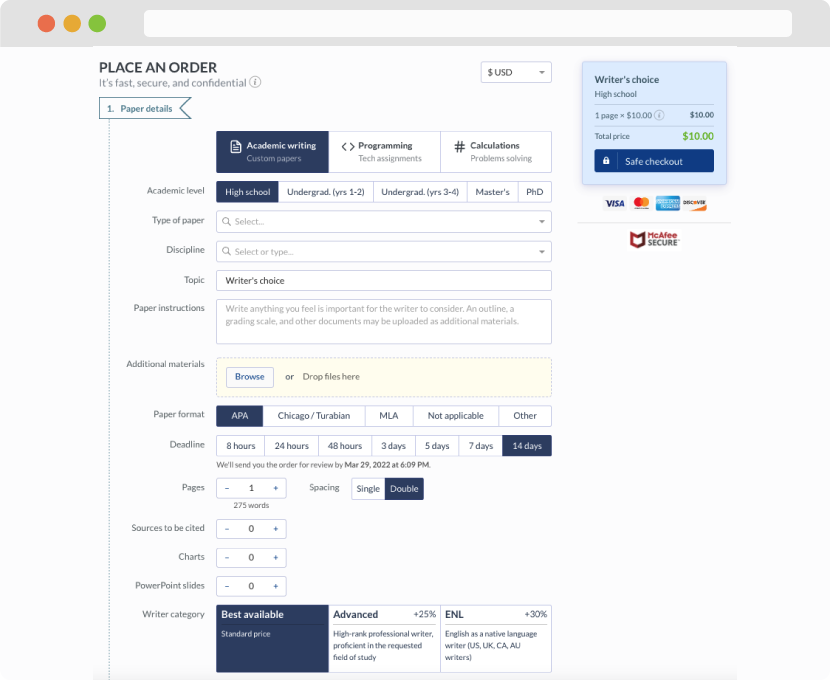

First, you will need to complete an order form. It's not difficult but, if anything is unclear, you may always chat with us so that we can guide you through it. On the order form, you will need to include some basic information concerning your order: subject, topic, number of pages, etc. We also encourage our clients to upload any relevant information or sources that will help.

Complete the order form

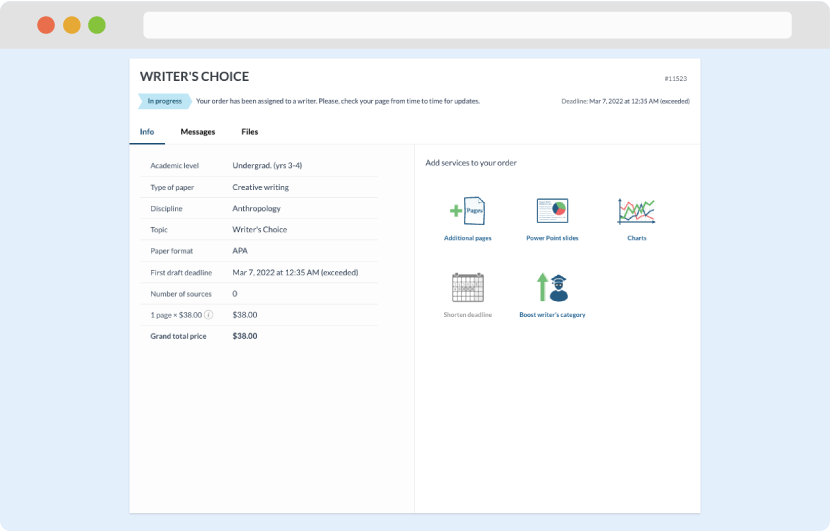

Once we have all the information and instructions that we need, we select the most suitable writer for your assignment. While everything seems to be clear, the writer, who has complete knowledge of the subject, may need clarification from you. It is at that point that you would receive a call or email from us.

Writer’s assignment

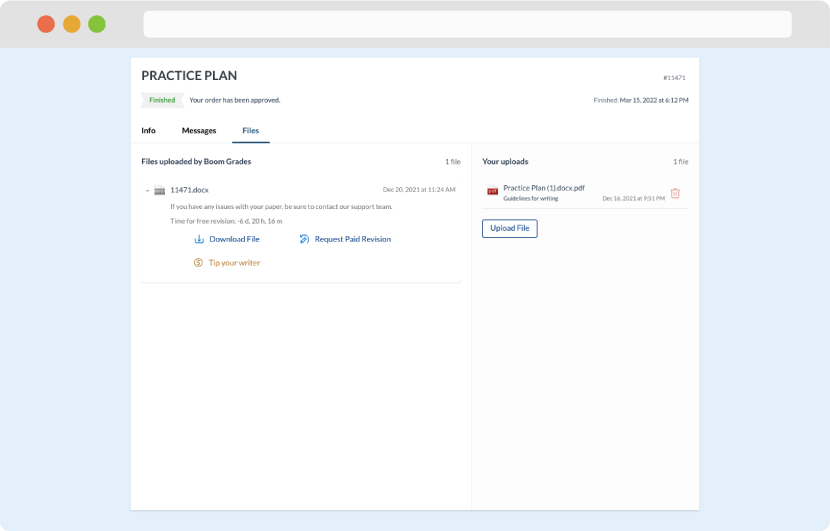

As soon as the writer has finished, it will be delivered both to the website and to your email address so that you will not miss it. If your deadline is close at hand, we will place a call to you to make sure that you receive the paper on time.

Completing the order and download