Prior to beginning work on this assignment, review your textbook readings covered thus far and the Occupational Outlook Handbook: Healthcare Occupations.

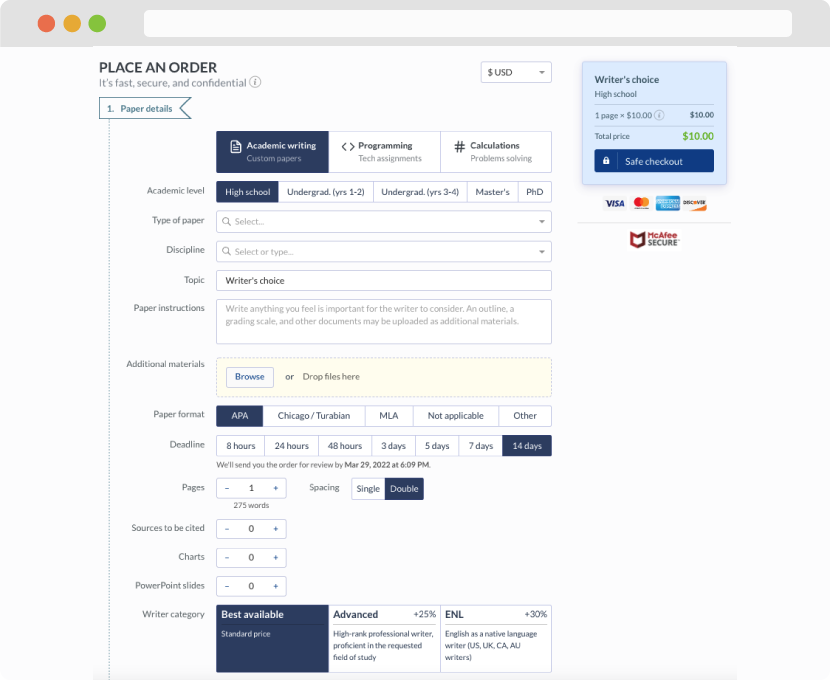

This assignment is the first part of a comprehensive presentation you will develop on the U.S. health care system. For this assignment, you will provide an overview of the U.S. health care system. Follow the instructions below to complete the assignment.

Introduction:

Begin your presentation by including a title slide (see specifics below). In the speaker’s notes of this slide, include your introductory information, which will include your degree plan and any health care experience you have had or share your qualifications related to the information you are presenting. If you have no health care experience, you can be creative with professional experience.

Next, create an overview slide that describes the required components to be covered within the presentation. Add bulleted points for each of the topics being covered. Briefly describe each bulleted point in the speaker’s notes.

Content:

The remaining slides will address the content of the presentation and the references. The content will address the following required components:

Refer to the timeline simulation Global Perspectives: Shifts in Science and Medicine That Changed Healthcare, reviewed in Week 1. Chapter 2 in your textbook discusses the evolution of our health care system and is a good resource for this part of the presentation as well.

Evaluate each stakeholder’s effect on the health care system by discussing their purpose and impact.

Include examples of both positive and negative impacts made by your chosen stakeholders (e.g., a negative contribution is when a patient uses the emergency room for nonurgent care).

Consider using the PowerPoint Instructions Handout to locate linked resources for properly making a PowerPoint presentation. Also consider these help tools: PowerPoint Best Practices and Don McMillan: Life After Death by PowerPoint. Wikimedia Commons can also help you explore Creative Commons images. You may also want to review What Is CRAAP? A Guide to Evaluating Web Sources.

Submit your assignment via the classroom to the Waypoint Assignment submission button by Day 6 (Sunday) no later than 11:59 p.m.

APA Requirement Details:

The U.S. Health Care Presentation: Part 1 Assignment

Title of presentation

Student’s name

Course name and number

Instructor’s name

Date submitted

For further assistance with the formatting and the title page, refer to APA Formatting for Word 2013.

The Scholarly, Peer-Reviewed, and Other Credible Sources table offers additional guidance on appropriate source types. If you have questions about whether a specific source is appropriate for this assignment, please contact your instructor. Your instructor has the final say about the appropriateness of a specific source for a particular assignment.

2.1 U.S. Healthcare Before 1900

The practice of medicine before the 20th century was performed by independent,

unorganized, and poorly educated individuals who relied mostly on folk remedies to treat

their patients. By the mid-1800s, hospitals, pharmaceuticals, and training standards for

doctors emerged and gained acceptance. The Civil War ushered in significant changes in

new medical procedures and practices that changed the course of medical history.

Mary Eastman/Newberry Library/SuperStock

As depicted in this Seth Eastman painting (1853), colonial medicine relied primarily on medieval

concepts of the body, which contrasted sharply with the sophisticated holistic remedies of native

cultures.

Early American Medicine (1700s)

During the Colonial period in the United States (1700–1763), the practice of medicine was

primitive, as was the healthcare provided to the early settlers. Medical practices differed

sharply between the European settlers and native cultures. Native Americans had a

sophisticated collection of herbal remedies, many of which became the foundation of

modern treatments. By contrast, the European colonists practiced medicine that relied on

medieval concepts of the human body.

Colonial America was a period of “heroic medicine.” Aggressive treatments such as

bleeding, purging, and blistering occupied a central place in therapeutics. Bleeding patients

until unconsciousness, followed by heavy doses of mercurous chloride until salivation, was

a popular treatment. Despite attempts to make the practice of healthcare more

professional, medicine splintered into different philosophies, making it difficult for doctors

to command the authority they desired (Starr, 1982, pp. 30–55).

In philosophy and practice, Western medicine and Native American medicine remained

divergent. Table 2.1 contrasts basic characteristics of Western and Native American

medicine. Western medicine arrived with Europeans, who brought increased scientific

understanding and more sophisticated medical practices. Western practitioners focused on

the illness and used scientific therapies to rid the body of disease. The physician was

considered the authority figure and healer. Native American medicine, on the other hand,

was holistic, focusing on the patient and involving the patient in the healing process.

In the 20th century, the practice of medicine has been dominated by an evidence-based

Western approach. Only recently has holistic medicine regained some credibility as a

viable alternative to traditional Western medicine. Refer to Chapter 7 for a discussion of

alternative healthcare.


Table 2.1: Comparison of the characteristics of Western and Native American medicine

| Western medicine | Native American medicine |
| --- | --- |
| Focus on pathology and curing disease. | Focus on health and healing the person and community. |
| Reductionist: Diseases are biological, and treatment should produce measurable outcomes. | Complex: Diseases do not have a simple explanation, and outcomes are not always measurable. |
| Adversarial medicine: “How can I destroy the disease?” | Teleological medicine: “What can the disease teach the patient? Is there a message or story in the disease?” |
| Investigate disease with a “divide-and-conquer” strategy, looking for microscopic cause. | Looks at the big picture: the causes and effects of disease in the physical, emotional, environmental, social, and spiritual realms. |
| Intellect is primary. Medical practice is based on scientific theory. | Intuition is primary. Healing is based on spiritual truths learned from nature, elders, and spiritual vision. |
| Physician is an authority. | Healer is a health counselor and advisor. |
| Legitimacy is based on credentials and licensure. | Legitimacy is based on behavior and reputation for spiritual power. |
| Subject to review, regulation, and sanction by licensing boards and the State. | Healers accountable to elders, communities, and tribal justice systems. |
| Fosters dependence on medication, technology, and pharmaceuticals. | Empowers patients with confidence, awareness, and tools to help them take charge of their own health. |
| High medical costs. | There is no fixed fee for services; the healer achieves status through generosity. |
| Health history focuses on patient and family: “Did your mother have cancer?” | Health history includes the environment: “Are the salmon in your rivers ill?” |
| Intervention should result in rapid cure or management of disease. | Patience is paramount. Healing occurs when the time is right. |

Source: Adapted from K. Cohen’s Honoring the medicine: The essential guide to Native American healing (New York: Ballantine Books, 2003). Reprinted with permission of the author.

During the Colonial period, anyone could claim to be a doctor. No standards existed for

entry into the profession or for medical education. Many physicians lacked self-confidence

and in general, the populace did not esteem them. Pioneer Medicine in Virginia mentions

regulatory bills from 1639 describing physicians as “avaritious and gripeing practitioners

of phisick and chirurgery” who charged immoderate fees for their services (as cited in

Vogel, 1970, pp. 113–114). This attitude continued well into the 1800s, with many white

Europeans continuing to use lay healers and even native shamans because of their mistrust

and even hostility toward the medical profession. Although a few eminent doctors made

handsome fortunes, most were unable to make a living practicing medicine.

Science and Society/SuperStock

Prior to the establishment of education and accreditation standards, doctors were free to sell their

“remedies” to passers-by on the street.

The movement toward health professionalism took a dramatic leap after the founding of

Pennsylvania Hospital in the early 1750s, the first mental hospital for assisting the insane,

and the charter of the first medical school in Philadelphia in 1765. By the time of the

American Revolution, the country had 3,500 to 4,000 physicians, 400 of whom had formal

medical training; perhaps half of these held medical degrees (Starr, 1982).

First Marine Hospital

The Marine Hospital was one of the first signs of medical organization, as well as one of the

first publicly funded, pre-paid health insurance programs in U.S. history. President John

Adams signed the Marine Hospital Fund into law on July 16, 1798. The law provided care

for ill and disabled seamen in the U.S. Merchant Marine and Coast Guard, along with other

federal beneficiaries, at several hospitals located at sea and river ports.

Pilots, captains, cooks, pursers, engineers, stevedores, roustabouts, and deckhands were

eligible for treatment and care. In addition to government support, the seamen were

required to contribute twenty cents a month for their future hospital care. Besides

providing healthcare, these Marine Hospitals acted as gatekeepers against the port entry of

pathogenic diseases.

The Marine Hospital program continued with little change until the outbreak of the Civil

War, at which point 27 hospitals were in operation around the country. After the war,

President Ulysses S. Grant moved operations of the Marine Hospital under the Military

Department. As the first Supervising Surgeon, Dr. John Maynard Woodworth took charge of

the Marine Hospital Service. Based on his Civil War experience, Dr. Woodworth adopted

new standards of hygiene, set up decontamination procedures, standardized medications,

and established nutritional regimes.

Many of America’s modern healthcare systems evolved from the Marine Hospital Service,

which was also the origin of the Public Health Service, National Institutes of Health (NIH),

the Centers for Disease Control and Prevention (CDC), and the Indian Health Service (U.S.

Marine Hospital, n.d.; U.S. National Library of Medicine, 2010).

Medicine in the 1800s

Until the mid-19th century, doctors were free to make up medicine as they went along,

borrowing a medical page from any source. However, several events combined to make the

1800s a period of increased control of American healthcare. Most important to the actual

practice of medicine was the Civil War. (See the section, Impact of the Civil War on

American Medicine.) In addition to the war, the growth of cities led public health officials to

improve sanitation methods as a way to prevent the spread of communicable disease, and

the founding of the American Medical Association (AMA) attempted to define

educational standards for physicians.

American Medical Association

Despite government’s early attempts to bring some order to healthcare with the

establishment of the Marine Hospital Service, American healthcare remained in a state of

chaos during the nineteenth century. Physicians were unsure of their ability both to offer

effective cures and to make a living as doctors. Quack remedies flourished.

No standards of medical education or accreditation existed until 1845 when Nathan S.

Davis, MD, not yet 30 years old, introduced a resolution to the New York Medical Society

endorsing the establishment of a national medical association to “elevate the standard of

medical education in the United States” (American Medical Association [AMA], 2014b, para.

2). Along with others, Davis founded the AMA in 1847 at the Academy of Natural Sciences

in Philadelphia (AMA, 2014b). The goals of this new organization were

• to promote the art and science of medicine for the betterment of the public health,

• to advance the interests of physicians and patients,

• to promote public health,

• to lobby for legislation favorable to physicians and patients, and

• to raise money and set standards for medical education.

One of the AMA’s first initiatives established a board to examine suspect remedies and

other forms of treatment (such as homeopathic, osteopathic, and chiropractic) that the

organization deemed “bad medicine” and to inform the public regarding the danger of

these practices (AMA, 2014a). Despite the AMA’s claims that its purpose was to support

medical progress, critics argued that the organization acted more like the medieval guilds

that represented artisans and merchants in its efforts to increase physicians’ wages, control

the supply of physicians, and prevent nonphysicians from practicing any form of

healthcare. In 1870, in an effort to unify and strengthen the profession, the AMA accepted

homeopaths and eclectics into its medical fold.

The 1910 Flexner Report (a study of medical education in the United States and Canada)

found that many medical schools were nothing more than diploma mills, a conclusion

which prompted new and stricter standards for medical training. The AMA advocated a

minimum of four years of high school, four years of medical school, and the passage of a

licensing test. As a result, by 1915 the number of medical schools had fallen from 131 to 95

and graduates from 5,440 to 3,536 (Starr, 1982, pp. 118–121). By 1920, 60% of the

physicians in the country were members of the AMA. Thus the AMA played a significant role in

the beginning of organized medicine, as well as the rise to power and authority of the

medical profession (Collier, 2011). Since the beginning of the 20th century, membership in

the AMA has declined. As of December 31, 2011, only 15% of the 954,000 practicing

physicians were members of the AMA (Collier, 2011).

Impact of the Civil War on American medicine (1861–1865)

About the same time that the AMA was struggling to reform the practice of medicine, the

United States entered into one of the costliest and bloodiest conflicts in its short history,

the Civil War. The small staff of army doctors had no idea how to deal with large-scale

military medical and logistical problems, such as widespread disease among the troops,

camp overcrowding, and unsanitary conditions in the field. In addition, surgeons—

unaware of the relationship between cleanliness and infection—did not sterilize their

equipment. Infection following surgery was a common problem (Floyd, 2012).

In spite of the devastation, the war’s influence on American medicine and its system of

healthcare proved both lasting and beneficial. The Army medical corps increased in size,

improved its techniques, and gained a greater understanding of medicine and disease,

which led to a new era in modern medicine. The United States Sanitary Commission,

created in June 1861, led the fight to improve sanitation. The Commission built large,

well-ventilated hospital tents and more permanent, cleaner pavilion-type hospitals. Doctors

became more adept at surgery and the use of anesthesia. They now recognized that

enforcing sanitary standards in the field could reduce the spread of disease. Another

important advance occurred when women were encouraged to join the newly created

nursing corps. Respect for the role of women in medicine rose considerably among both

doctors and patients. Clara Barton, the Civil War’s most famous nurse, established the

American Red Cross in 1881 (Civil War Society, 1997; Sohn, 2012). Crews that were

organized to take wounded soldiers to battlefield hospitals in specialized wagons became

the nation’s first ambulance corps. The war contributed significantly to the development of

an established and organized healthcare system.

Before the Civil War, most people requiring medical care were treated at home. After the

war, hospitals adapted from the battlefront model cropped up across the country, the

direct ancestors of today’s medical centers. Battlefield service gave surgeons the

experience needed to perform risky surgeries. After the war, military physicians such as Dr.

John Shaw Billings and Dr. John Maynard Woodworth became leaders of the American

Public Health Association (APHA), which was founded in 1872 by Dr. Stephen Smith to

focus on improving public health.

Early hospitals

Before the Civil War, many hospitals were more concerned with religion and morals than

health. Maintained by volunteers and trustees, they depended primarily upon charity for

their existence. As a result, hospitals typically served as the last resort for the infirm, the

mentally and physically disabled, and the homeless, while wealthier patients received

home care from their private physicians. It was rare for an American doctor to set foot in a

hospital ward, where a patient had a better chance of dying than leaving alive.

In 1752, Pennsylvania Hospital opened in Philadelphia, becoming the United States’ first

permanent general hospital to care for the sick. Financed by voluntary donations, New York

Hospital opened in 1792 and Boston’s Massachusetts General Hospital in 1821.

By the end of the Civil War, with the discovery of anesthesia and advancements in

diagnostic medical devices and surgical procedures, hospitals had become safer places.

Surgeons prepared before surgery and cleaned their instruments with antiseptics.

Anesthesia allowed for slower and more careful surgeries, which were performed earlier in

the course of disease and included a variety of previously inoperable conditions such as

appendicitis, gall bladder disease, and stomach ulcers (Rapp, 2012). With the introduction

of fees in the 1800s, hospitals began to offer more effective treatments and ones that the

middle and upper classes viewed as more useful. Physicians, eager to enhance their

education and increase the size of their private practices, vied for hospital positions. As a

result, hospitals moved from the fringes of medicine to become large businesses and

educational institutions focused on doctors and patients rather than on patrons and the

poor. With the advent of urbanization and new modes of transportation, hospitals became

more accessible and created more opportunities for physicians to increase their prestige

and income (Starr, 1982, pp. 146–162).

The Civil War had been instrumental in establishing a system of healthcare in the United

States. As the country came of age at the turn of the 20th century, breakthroughs in

medicine and technology ushered in a new era of healthcare. The promise of better

methods of diagnosis and treatment and newly discovered drugs changed the medical

landscape. Even as medical advances increased the quality of care and life expectancy, an

expanding healthcare system and increased population presented new challenges for both

the medical establishment and the government, which began to concern itself with public

health, and access to care, on a broad scale. As the country’s population shifted from rural

to urban areas, new health crises arose that demanded effective public health policies. The

Great Depression (1929–1941) further altered healthcare, as millions of people found

themselves without the means to pay for care. Franklin D. Roosevelt’s administration

attempted to pass a form of universal healthcare but settled for the Social Security

program. Nonetheless, the near-passage of a national health insurance program

spurred the development of private health insurance and Blue Cross.

Bettmann/Corbis/Associated Press

Following events such as the influenza pandemic of 1918 and 1919, public health measures applied

the science of bacteriology, isolated patients, and disinfected contaminants.

Public Health Advances

In 1872 Dr. Stephen Smith helped found the American Public Health Association (APHA).

The objective of this new organization was to protect all Americans and their communities

from preventable, serious health threats, especially communicable diseases. Using

knowledge derived from the Civil War about disease prevention, along with recent

advances in bacteriology, APHA advocated adopting the most current scientific advances

relevant to health and education in order to improve the health of the public and to develop

health departments at both the federal and local levels. The Department of Public Health,

established by the U.S. Congress in 1879, was a direct outgrowth of the Marine Hospital

Service’s original charge of controlling major epidemic diseases through quarantine and

disinfection measures, as well as immunization programs at ports of entry.

The Public Health Service (PHS), and eventually the Department of Health and Human

Services, continued the services of the Department of Public Health and eventually

expanded to include eight major health agencies (Figure 2.1).

The first phase of public health emphasized environmental sanitation to stop the spread of

epidemic diseases. The second witnessed the first application of bacteriology and

emphasized isolation and disinfection. Both of these phases were a response to the deadly

influenza pandemic, which decimated much of the globe between 1918 and 1919. In the

United States alone, influenza affected a quarter of the population and decreased average

life expectancy by 12 years. From a population of 105 million, 675,000 people died from

the disease (U.S. Department of Health and Human Services [HHS], 2013e).

Figure 2.1: The U.S. Public Health Services

Public health organizations such as those within the Department of Health and Human Services

continue to uphold their founding goals: to help protect communities against health threats.

Source: Adapted from U.S. Department of Health and Human Services (HHS) (n.d.), HHS organizational chart.

Retrieved from http://www.hhs.gov/about/orgchart/

The concepts of bacteriology and public health ushered in a new era of healthcare.

Physicians began to rely less upon drugs as treatments and more on hygiene. Major

advances in bacteriology and immunology led to vaccines and treatments for typhoid,

tetanus, diphtheria, dysentery, and diarrhea. These advances also accounted for a

significant fall in mortality and a general rise in life expectancy (Starr, 1982, pp. 134–140).

These improvements in medical care, as well as large-scale public health innovations—

clean water technologies, sanitation, refuse management, milk pasteurization, and meat

inspection—are credited with a dramatic and historic increase in life expectancy, from 47

to 63 years (Cutler & Miller, 2004, p. 4, para. 1).


Beginnings of Health Insurance

The concept of health insurance predates the Civil War. As early as 1847, Massachusetts

Health Insurance of Boston offered group policies that provided accident insurance for

injury related to travel by railroad and steamboat, as well as other benefits. The

Association of Granite Cutters union offered the first national sick benefit plan (Zhou,

2009). These plans eventually broadened to include coverage for all disabilities from

sickness and accidents. Still, the populace was slow to adopt health insurance, as a lack of

effective treatments made it largely unnecessary (Thomasson, 2003). The main purpose of

“sickness insurance” was to make up for lost wages from missing work, which at the time

were significantly greater than the cost of healthcare. The passage of the National Insurance

Act in 1911 in England firmly established the term, and the concept, of “health insurance”

(Murray, 2007).

As insurance plans grew in popularity, employee-based benefit plans (often enlarged

through the power of labor unions) began to appear and grow with government

encouragement. Metropolitan Life Insurance Company began a plan for its employees in

1914; in 1928 General Motors signed a contract with Metropolitan to cover its employees

as well (Starr, 1982, pp. 200–209, 241–242, 294–295). Other forms of insurance were

provided by specialized industries (railroad, mining, and lumber companies), fraternal

orders (lodge practice), and private clinics such as the Mayo Clinic in Rochester,

Minnesota, and the Menninger Clinic in Topeka, Kansas.

In 1929, a group of teachers in Dallas contracted with Baylor University Hospital to provide

a set number of hospitalization days in exchange for a fixed payment. With encouragement

from the American Hospital Association (AHA), more hospitals began to develop similar

arrangements and to compete with one another to provide prepaid hospital services.

Eventually the plans merged under the sponsorship of the AHA, subsequently adopting the

name Blue Cross. Other insurers who wished to join their plans with Blue Cross were

required to allow subscribers free choice of physician and hospital. Unlike the Blue Cross

plans of today, these early plans operated as nonprofit corporations, and as such, they

benefitted from tax-exempt status and freedom from insurance regulation. Meanwhile,

physicians and AMA members, wary of the loss of autonomy and concerned that the

contemporary Social Security legislation would lead to compulsory health insurance,

developed their own prepaid plans under the umbrella of Blue Shield. Patients insured

through Blue Shield paid the difference between a set plan payment and the actual charges

(Thomasson, 2003). Blue Shield worked like insurance; Blue Cross was more of a

prepayment plan.

These early forms of health insurance forestalled any potential for national health

insurance, bonded the employee to the employer, and stabilized the financing of the rising

healthcare industry.

Disease-Focused Medicine

After Congress passed a series of laws in 1937 to promote cancer research, an alignment of

the lay public and medical research establishment promoted disease-focused medicine.

Under the domain of the National Institutes of Health, the National Cancer Institute (NCI)

made research grants to outside researchers. Mary Lasker, a wealthy lay lobbyist, took an

active role in the American Society for the Control of Cancer, which was eventually renamed the American Cancer Society. The

Lasker group used advertising to raise funds for cancer research. In like manner, the

creation of the March of Dimes organization and its fundraising through advertising

benefitted the National Foundation for Infantile Paralysis and raised more money for

research than any other health campaign. Part of the money raised by the March of Dimes

went toward James Watson and Francis Crick’s discovery of the double-helical structure of

DNA (Starr, 1982, pp. 338–347; Stevens, Rosenberg, & Burns, 2006, pp. 180–181).

Mary Lasker encouraged doctors and research scientists to ask for huge sums of money—

and Congress obliged, becoming the go-to source of funding for medical researchers.

Between 1941 and 1951, the federal budget for medical research rose from $3 million to

$76 million. Today there are an estimated 3,100 disease-specific interest groups and

illness-based lobbies (Stevens, Rosenberg, & Burns, 2006, p. 35).

Social Security

After the Civil War and before the Great Depression, the United States experienced

significant demographic and social changes that eroded the foundation of the average

American’s economic security. The Industrial Revolution transformed the majority of

working people from self-employed agricultural workers into wage earners in large

industrial concerns that at any time could lay off workers or go out of business. By 1920,

more people were living in cities than on farms, mostly for employment reasons. With the

migration to the cities, the concept of taking care of extended family members too old or

infirm to work disappeared. In addition, Americans were living longer thanks to better

healthcare, sanitation, and public health programs (Social Security Administration, 2013b).

The Beginnings of Social Security

The Great Depression (1929–1941) prompted the federal government to address the

growing problem of economic security for the elderly. The result was the Social Security

Act of 1935, signed into law by President Franklin D. Roosevelt. This landmark social

insurance system provided benefits to retirees and the unemployed. It was financed by a payroll

tax on current workers’ wages: half was paid directly by the worker and half was paid by

the employer. Included in the Social Security Act was Title IV, which provided grants to

states for aid to dependent children (Social Security Administration, 2013a).

Prior to the Social Security Act, the similar Civil War Pension program had provided

benefits to disabled soldiers, as well as widows and orphans of deceased soldiers. Old age

became a sufficient qualification for Civil War pension benefits in 1906 (Social Security

Administration [SSA], 2013b). Three decades later, the Social Security Act passed by a wide

margin in both houses of Congress. For the first time in history, the government was the

major party responsible for social welfare. The program initially contained provisions for

government-sponsored compulsory health insurance, but the provision was removed

because of expected resistance from the AMA. Following the passage of the National Labor

Relations Act (Wagner Act) that same year, labor unions earned the right to use collective

bargaining to obtain healthcare coverage (Starr, 1982, pp. 268, 311, 312).

The first payments under Social Security were made as a lump sum to those who

contributed to the program but who would not participate long enough to be vested for

monthly benefits. Beginning in January 1940, however, payments were monthly checks,

payable to retired workers or their eligible spouses, children, or surviving parents. At age

65 Ida May Fuller of Ludlow, Vermont, received the first monthly retirement check for

$22.54 (Social Security Administration, 2013b).

World War II (1941–1945) and Its Aftermath

Although war is a time of hardship, the end of war often brings major innovations in

science and technology, as well as changes in cultural and social values. The end of World

War II—like the end of the Civil War and World War I—heralded significant advances in

American society. By 1945, health insurance was common. President Harry S. Truman’s

health initiatives had resulted in the Hill–Burton program, legislation that earmarked

funds for improving the nation’s hospitals and initiated a hospital construction boom. On

the health development front, diseases were being studied methodically, leading to

improved prevention, containment, and treatment. Meanwhile, surgery developed as a

specialty, and imaging technology opened up new ways to view the body. As the country

witnessed the rise of corporate and government control of medicine, reformers laid the

groundwork for President Lyndon Johnson’s Great Society and its cornerstones: Medicare

and Medicaid (discussed later in this chapter).

Centers for Disease Control and Prevention (CDC)

During World War II, malaria infected many of the soldiers training in the South. The Office

of Malaria Control in War Areas (MCWA) was founded to fight malaria by killing the

mosquito carriers. In 1946, Joseph W. Mountin, a physician and public health leader,

converted the MCWA into a peacetime agency—the Communicable Disease Center (CDC)—

to monitor and control infectious diseases. Located in Atlanta, Georgia, the CDC was part of

the U.S. Public Health Service and had approximately 400 employees and a budget of

approximately $10 million (Centers for Disease Control and Prevention [CDC], 2013b).

The CDC’s major mission was to collect, analyze, and disseminate disease data to public

health practitioners. Its primary responsibilities were to protect, educate, and promote

health and safety for the public and government. Over time, its functions grew to include a

wide range of preventable health problems, including infectious diseases and epidemics,

primarily poliomyelitis and influenza at the time (CDC, 2013b; Tharian, 2013).

In 1970 the Communicable Disease Center was renamed the Center for Disease Control (it

became the Centers for Disease Control and Prevention in 1992) to reflect a more global mission of health protection, prevention, and

preparedness. Malaria, once considered a threat to the country’s security, has been

overshadowed by other health issues, including birth defects, West Nile virus, obesity,

avian and pandemic flu, Escherichia coli, automobile accidents, and bioterrorism, as well as

sexually transmitted diseases, cancer, diabetes, heart disease, AIDS, environmental

health threats, and threats from biological warfare, to name a few (Koplan, 2002). The CDC

headquarters houses one of two repositories in the world for the smallpox virus.

National Institute of Mental Health (NIMH)

Before World War II, in-house professionals at state psychiatric hospitals treated mental

health patients who resided in the hospital. Conditions were less than ideal, and eventually

there were calls for reform of the asylum-based mental healthcare system (Novella, 2010).

Mental illness gained recognition as a significant public health problem when more than

one million men were rejected from military service during World War II because of mental

and psychoneurotic disorders, and another 850,000 were hospitalized as psychoneurotic

cases. In response, President Truman called for, and Congress authorized, large sums of

money for psychiatric training and research that ultimately gave rise to the founding of the

National Institute of Mental Health (NIMH) as part of the National Institutes of Health (NIH)

in 1949. Of the various divisions of NIH, none grew faster than NIMH. Soon after the war,

child development, juvenile delinquency, suicide prevention, alcoholism, and television

violence came under the scrutiny of NIMH (Starr, 1982, p. 346).

In 1961, the Joint Commission on Mental Illness and Health issued a report titled Action for

Mental Health calling for a national program to meet the needs of the mentally ill. In the

mid-1960s, the NIMH established centers for research on schizophrenia, child and family

mental health, and suicide, as well as crime and delinquency, minority group mental health

problems, urban problems, and later, rape, aging, and technical assistance to victims of

natural disasters. The increasing recognition of the problems associated with alcohol abuse

led to the creation of the National Center for Prevention and Control of Alcoholism as part

of NIMH in the mid-1960s and eventually to the establishment of the Center for Studies of

Narcotic and Drug Abuse.

In 1968, NIMH became a component of the Public Health Service’s Health Services and

Mental Health Administration (HSMHA), later to become the Alcohol, Drug Abuse, and

Mental Health Administration (ADAMHA) in 1974. The first major breakthrough in NIMH

research was the discovery of noradrenaline, which plays a significant role in manic–

depressive illness (bipolar disorder). Shortly after, based on this research, the Food and

Drug Administration (FDA) approved the use of lithium as a treatment for mania (National

Institute of Mental Health [NIMH], 1999).

Drugs That Changed Healthcare

The 20th century was the era of chemical medicine. Large international pharmaceutical

companies developed potent chemicals to treat or control infection, hypertension, diabetes,

cancer, and many other ailments, which improved quality of life as well as life expectancy

(now 75 to 85 years). However, it is important to understand that life expectancy is an

average; much of the improvement in life expectancy during this period was due to a

reduced risk of infant mortality, as well as improvements in public health, not just new

drug treatments.
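
The point that life expectancy is an average can be illustrated with a small calculation. The sketch below uses a made-up cohort of 1,000 births (the counts and ages are hypothetical, chosen only to make the arithmetic easy) and shows how reducing deaths in infancy raises the average even when adults live no longer than before.

```python
def life_expectancy_at_birth(deaths_by_age):
    """Return the mean age at death for a cohort.

    deaths_by_age maps an age at death to the number of people
    in the cohort who died at that age (hypothetical data).
    """
    total_people = sum(deaths_by_age.values())
    total_years_lived = sum(age * count for age, count in deaths_by_age.items())
    return total_years_lived / total_people


# Hypothetical cohort A: 200 of 1,000 newborns die in infancy, the rest at 70.
high_infant_mortality = {0: 200, 70: 800}

# Hypothetical cohort B: only 20 die in infancy; adults still die at 70.
low_infant_mortality = {0: 20, 70: 980}

before = life_expectancy_at_birth(high_infant_mortality)
after = life_expectancy_at_birth(low_infant_mortality)

print(f"High infant mortality: {before:.1f} years")
print(f"Low infant mortality:  {after:.1f} years")
```

In both hypothetical cohorts every adult dies at 70, yet the average rises by more than a decade, which is why a higher life expectancy does not by itself show that adults are living longer.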

Table 2.2 describes some of the drugs that changed medical care and society in general.

Table 2.2: Drug discoveries that changed medicine

| Drug | Type | Year of discovery | Discovered/Perfected by | Treatment for |
|---|---|---|---|---|
| Morphine | analgesic | 1804 | Friedrich Wilhelm Adam Sertürner (Germany) | pain |
| Ether | anesthetic | 1846 | William Morton (U.S.) | pain |
| Aspirin | acetylsalicylic acid | 1853 | Charles Frederic Gerhardt (Germany) | pain |
| Salvarsan | antimicrobial | 1909 | Paul Ehrlich (Germany), Sahachiro Hata (Japan) | syphilis |
| Insulin | hormone | 1921 | Frederick Grant Banting and Charles Best (Canada) | diabetes |
| Penicillin | antibiotic | 1928 | Alexander Fleming (Scotland) | anthrax, tetanus, syphilis, pneumonia |
| Chlorpromazine (Thorazine) | antipsychotic | 1950 | Paul Charpentier, Simone Courvoisier, and Pierre Deniker (France) | schizophrenia and bipolar disorder |
| Polio vaccine | vaccine | 1952 | Jonas Salk (U.S.), Albert Sabin (Poland/U.S.) | poliovirus |
| Haloperidol (Haldol) | antipsychotic | 1958 | Paul Janssen (Belgium) | schizophrenia, acute psychosis, delirium, and hyperactivity |

Expansion of Hospitals and The Joint Commission

The field of surgery expanded in the latter half of the 20th century, increasing the need for

facilities for more advanced procedures. At the same time, a population that had lived and

worked in the countryside shifted to the cities due to the industrialization of work and

improved transportation systems. It was natural for hospitals to grow in such an

environment. The expansion of the hospital system, partly because of capital generated

through Medicare, created new opportunities for physicians, who saw access to these

workplaces as opportunities for greater income.

In addition to large metropolitan entities, ethnic, religious, and specialty hospitals arose to

cater to unique groups of customers. As hospitals developed into larger, more complex

healthcare systems, the hierarchy of the hospital separated into three centers of

authority—trustees, physicians, and administrators.

In 1946, the Hospital Survey and Construction Act (known as the Hill–Burton program)

provided aid to the nation’s community hospitals and later permitted grants for long-term

and ambulatory care facilities. During this same period, the number of hospital beds grew

by about 195,000 at a capital investment of $1.8 billion (annual operating costs), greatly

increasing the nation’s healthcare bill. At the same time, a construction program to expand

the Veterans Administration hospital system was underway.

The Joint Commission on Accreditation of Hospitals (JCAH) was founded in 1951 to

evaluate healthcare organizations and promote hospital reform based on managing

outcomes of patient care. In addition, JCAH established accreditation standards for

hospitals. Members from the American College of Physicians (ACP), the American Hospital

Association (AHA), the AMA, and the American College of Surgeons (ACS) came together to

establish JCAH (renamed Joint Commission on Accreditation of Healthcare Organizations

[JCAHO] in 1987, and again renamed and rebranded The Joint Commission [TJC] in 2007).

Today TJC advocates the use of patient safety measures, the spread of healthcare

information, the measurement of performance, and the introduction of public policy

recommendations (Joint Commission, 2013; Starr, 1982, pp. 348–349, 375–377, 389).

In the mid-twentieth century, the U.S. government began to take a greater role in

the management of healthcare, and to provide coverage for the elderly (Medicare),

children, and the low-income uninsured (Medicaid). Technology improvements, an

expansion of the hospital system, and increased access to care led to rising healthcare

costs. As the government assumed a larger role, cost containment through health planning

and consolidation of resources became major issues. The Health Maintenance Organization

(HMO) Act offered the promise of reducing costs while providing incentives for the

expansion of HMO programs. Despite these changes, healthcare costs continued upward.

Medicare and Medicaid

In 1965 President Lyndon B. Johnson announced his program to create Medicare and to

launch his war on poverty with Medicaid. A number of groups, including labor unions,

liberal political leaders, and activists supported programs that provided greater access to

medical services, a more universal insurance system, comprehensive services, and

expanded medical facilities. These new programs aimed to improve healthcare for the poor

and aged, who at this point had limited access to quality medical care (Starr, 1982).

With the introduction of Medicare and Medicaid, the U.S. government took a greater role in

managing healthcare.

Medicare, under Title XVIII of the Social Security Act, guaranteed access to health insurance

for Americans over 65 years of age regardless of income or medical history. Medicare is a

social insurance program that spreads financial risk across society as opposed to private

insurance that adjusts prices according to perceived risk. Besides coverage for inpatient

and outpatient services, the program has expanded to provide payment for prescription

drugs, to cover younger people who have permanent disabilities, to cover individuals with

end-stage renal disease (ESRD), and to provide for hospice care (Centers for Medicare &

Medicaid Services [CMS], 2013a).

Not surprisingly, the AMA was opposed to these new programs, saying they were a threat

to the doctor–patient relationship. Although there was initial talk of a boycott of Medicare

and Medicaid patients, within a year, the AMA not only accepted Medicare, but also began

to profit from the program. However, doctors remained cool to Medicaid because of the

limits on what the program would pay for physician services.

Unlike Medicare, which is a social insurance program, Medicaid is a means-tested, needs-

based social welfare program. It was part of the Social Security Amendments of 1965 (Title

XIX). Funded by both the states and federal government through managed care programs,

it provides healthcare to low-income individuals, including children, pregnant women,

parents of eligible children, people with disabilities, and elderly needing nursing home

care. Medicaid is often bundled with other state-run programs such as the Children’s Health

Insurance Program (CHIP), Medicaid Drug Rebate Program, and Health Insurance Premium

Payment Program (Medicare.gov, 2013).

The costs of Medicaid and Medicare have increased dramatically since their inception in

1965, and there is much debate concerning their future financial viability without major

reform, as Chapters 3 and 8 discuss.

Comprehensive Health Planning Act

By the 1960s, healthcare was big business. The Hill–Burton program facilitated the

construction of nonprofit hospitals in rural and economically depressed areas, and then

expanded to include grants to long-term care, ambulatory care facilities, and emergency

hospital services. The result of the program was an oversupply of healthcare services

(Starr, 1982, p. 350). Rising healthcare costs and duplication of facilities led to a call for

more long-term planning.

In 1964 President Lyndon Johnson created the President’s Commission on Heart

Disease, Cancer, and Stroke to unite the fields of scientific research, medical education,

and healthcare. Chaired by Dr. Michael DeBakey, the commission recommended that the

federal government commit funds to establish a national network of regional centers. The

commission’s proposal led to the passage of the Comprehensive Health Planning and

Service Act of 1966, also known as the Partnership Health Act, which authorized federal

funding for the National Health Planning and Resource Development agency (Starr, 1982, p.

376). The agency’s mandate included the following objectives:

• To increase access to quality healthcare at a reasonable cost

• To produce uniformly effective methods of delivering healthcare

• To improve the distribution of healthcare facilities and manpower

• To address the uncontrolled inflation of healthcare costs, particularly the costs

associated with hospital stays

• To incentivize the use of alternative levels of healthcare and the substitution of

ambulatory and intermediate care for inpatient hospital care

• To improve the basic knowledge of proper personal healthcare and methods for

effective use of available health services in large segments of the population

• To encourage healthcare providers to play an active role in developing health policy

(Terenzio, 1975)

This strengthening of health planning and consolidation of resources took place at local,

state, regional, and federal levels, but the system was governed locally. The National Health

Planning and Resources Development Act followed in 1974, authorizing state and local

healthcare agencies to conduct long-term health planning activities and Certificate of Need

Programs (CON). Under CON, community needs—instead of consumer demand—

determined the facilities to construct and the new technology to adopt. This program

successfully placed controls on healthcare expansion (Cappucci, 2013).

UNDER THE MICROSCOPE

Environmental Health

Since the 1980s, environmental health has become a major national and international

issue. The field of environmental health is concerned with assessing, controlling, and

promoting the improvement of human health affected by the environment. This includes

housing, urban development, land use, and transportation (World Health Organization

[WHO], 2013a). Considering that between 1940 and 1960, healthcare professionals,

including doctors, regularly appeared in advertisements for tobacco companies, the health

and environmental consciousness of the country has shifted significantly. When children

ingesting lead paint chips showed signs of neurological damage, the CDC voiced concern

over lead paint, as well as other toxic materials, and worked to lower acceptable blood lead

levels in children.

The CDC, other regulatory agencies, and the public have debated the dangers of silicone

implants, tobacco, radiation, vinyl chloride, and global warming, while the press has

elevated these issues into national concerns. Although the public called for more

government action, businesses have complained that the costs of controlling these dangers

outweigh the supposed health benefits (Stevens, Rosenberg, & Burns, 2006).

Health Insurance Becomes a National Concern

Despite legislative attempts to create a national insurance program and the establishment

of the Blue Cross insurance program during the 1930s, health programs to cover the cost of

routine, preventive, and emergency healthcare procedures remained scarce in postwar

America. During World War II, federally imposed price and wage controls made it difficult

for companies to maintain employee loyalty, and the demand for workers was at an all-

time high because of the war effort. At that point, employer-sponsored health insurance

plans, offered to attract workers and maintain loyalty, dramatically expanded (National

Bureau of Economic Research [NBER], 2013). Under President Truman, Congress

attempted to create a public health insurance program in 1943 and again in 1945 with the

introduction of the Wagner-Murray-Dingell bill. In its final form in 1945, this bill proposed

a national social insurance system, which included health insurance, federally subsidized

unemployment insurance, and improved programs for the aged and survivors, all financed

by a single social insurance tax. The program was to be open to all classes of society. In the

end, opposition from the Chamber of Commerce, the American Hospital Association, and

the American Medical Association—who denounced it as socialism—defeated the plan

(Starr, 1982, pp. 280–286).

Privately paid and employer-sponsored insurance subsequently grew in popularity, while

the concept of a national insurance system faded from public consciousness. By 1958, 75%

of Americans had some form of health insurance (Walczak Associates, 2013). The poor and

the elderly, however, remained without coverage until the passage of Medicare and

Medicaid in 1965. Although these programs did much to reduce the number of Americans

without insurance, a persistent lack of coverage for many working Americans and the

unemployed compelled politicians to continue to debate national health insurance—now

referred to as universal healthcare. This debate would come to a head in the 1990s

during the Clinton Administration when Congress tried to pass a bill to create “health-

purchasing alliances.” Opposition from the insurance industry, employer organizations, and

the AMA blocked passage of the bill (Hoffman, 2009; Starr, 1995). Two decades would pass

before comprehensive healthcare reform would have its day on Capitol Hill.

Health Maintenance Organization Act

As medical costs continued to rise, industry players discussed ways to contain them. Health

Maintenance Organizations (HMOs), which use doctors as gatekeepers to control access to

medical services, have existed on a limited basis in some form or another since 1910.

Lumber mills offered early HMO-type plans at a cost of $0.50 per month. The Ross-Loos

Medical Group, which evolved into CIGNA Healthcare, provided HMO services to Los

Angeles County and city employees, and later, teachers and telephone workers, eventually

enrolling as many as 35,000 workers (Dill, 2007).

Paul M. Ellwood, Jr., a prominent pediatric neurologist who coined the term health

maintenance organization, approached President Richard Nixon about creating a system of

many competing HMOs that would give consumers a choice among health plans based on

price and quality. The intent of the program was to emphasize prevention and make use of

less expensive procedures. The enactment of the Health Maintenance Organization Act

of 1973 provided grants and loans to plan, start, or expand existing HMOs, removed state-

imposed restrictions on federally certified HMOs, and required companies with 25 or more

employees to offer a federally certified HMO along with indemnity insurance plans

(Stevens, Rosenberg, & Burns, 2006, pp. 317–323). This dual-choice provision gave HMOs

access to the employer-based market for the first time. It also exposed HMOs to state and

federal regulation (O’Rourke, 1974).

The growing influence of corporate medicine and the HMOs divided AMA members. Some

feared the continued loss of autonomy that increased with the arrival of the HMO-inspired

medical–industrial complex, while others wanted to buy into this new business-focused

approach to healthcare. At the time, the AMA “officially” rejected any form of corporate

practice of medicine and argued that HMOs did not provide enough treatment support. It

fought to keep professional services separate from facility/organizational services in order

to protect the profession. Since then, the AMA has realized the value of HMOs and has

lessened its resistance to managed care (Sherburne, 1992).

Whether HMOs actually reduce the cost of healthcare is debatable. Some studies show no

detectable differences in cost, while others suggest that some of the apparent savings are

due to cost shifting (Physicians for a National Health Program, 2000; Shin & Moon, 2007).

Legislative Attempts to Control Costs

The clash between corporate-run healthcare and the government’s attempts to legislate

medical care led to rapid healthcare inflation in the 1970s. The need for more regulation

and reform—a result of slow economic growth—coupled with persistent general inflation

reduced consumer demand for more medical care. The passage of Medicare and Medicaid

ensured that the government was the major buyer of healthcare services. Government

officials, while alarmed by the escalating cost of entitlements (e.g., Social Security and

Medicare) simultaneously criticized the lack of access to medical care for certain segments

of the population. Again, national health insurance surfaced as a potential cost solution,

along with the public’s perception of healthcare as a right rather than a privilege.

Legislatively, the government tiptoed around healthcare reform, but no meaningful effort

to reduce costs materialized.

In 1970, Senator Edward Kennedy introduced legislation calling for the replacement of

public and private insurance plans by a single, federally operated health insurance system.

In opposition to this legislation, President Nixon announced a new national health strategy,

of which the Health Maintenance Organization Act was one part, in the hope of containing

still-rising healthcare costs. Nixon’s strategy also included a federally run Family Health

Insurance program for low-income families, cutbacks in Medicare, an increased supply of

physicians, and capitation to medical schools for increasing enrollment. Doctors’ fees

would be limited to annual increases of 2.5% and hospital charges to increases of 6%. New

health planning agencies, also aimed at cost containment, were established after the

passage of the National Health Planning and Resources Development Act. Watergate

intervened, however, preventing Nixon from advancing his national health insurance

program (Starr, 1982, pp. 393–404).

Healthcare costs continued upward. As a percentage of the federal budget, healthcare

expenditures increased substantially, from 4.4% in 1965 to 11.3% in 1973 (U.S. Public

Health Services, 1981).

By the 1980s, the day of the country doctor or sole practitioner—along with house calls—

was over. The era of big corporate medicine had arrived, and even stand-alone hospitals

were disappearing as large corporate networks came to define medicine. Large

government healthcare programs competed with privately funded institutions, insurance

programs, and integrated hospital systems. The cost of U.S. healthcare was rising faster

than that of any other commodity. At the beginning of the 1980s, it was hard to envision that a

major expansion of government-funded healthcare was just a few decades away.

Arrival of Corporate Care

Significant changes were underway in the structure of healthcare by the 1980s, a time often

referred to as the age of privatization. The government redirected its policies toward

privatizing publicly funded institutions, and hospital systems were integrated into larger

healthcare networks, which included ancillary healthcare-related businesses. Marketing

practices, including direct-to-consumer advertising for pharmaceuticals and medical

devices, increased significantly. In addition, contracting work to third parties and

government deregulation were becoming standard procedures. All these trends, closely

associated with the recent globalization of financial capital, found their way to the

healthcare industry.

Private insurance coverage from large corporations increased from 12% of all insured in

1981 to 80% of the 200 million covered in 1999. Cost containment provided the rationale

for the corporate takeover of the health industry. At the same time, major breakthroughs

were occurring in medical science and technology, and the doctor–patient relationship was

changing; patients demanded more personal healthcare and more say in the healthcare

decision process.

Meanwhile, the focus on sickness-based medicine was shifting toward preventive health,

and the U.S. population was aging.

During the administration of President Ronald Reagan, reimbursements for Medicare

changed from a cost-based system to a diagnosis-determined (diagnosis-related group or

DRG) system. Unlike the cost-based system, which allows healthcare providers to receive

remuneration according to the costs they incur, DRGs are a way of classifying patients by

diagnosis, average length of hospital stay, and therapy received. The results determine how

much money healthcare providers will be given to cover future procedures and services,

primarily for inpatient care.

This shift aimed to create a more precise patient classification system, as well as set

standards for the use of hospital resources, which would result in more accountability.

Private insurers quickly adopted the same system. Congress also redirected the

government’s resources toward assisting and supporting the private sector rather than

competing with it. In essence, the government began to collaborate with businesses

through government-backed financing, special tax breaks, loans, grants, mixed boards of

directors (director boards made up of individuals from the government and business

sectors), and sovereign investment funds (investment funds provided by the government).

Both government and private enterprise were looking for solutions to the problems of

rising healthcare costs, as well as ways to provide access to the uninsured and under-

insured. Doctors—swept into the corporate system—sacrificed both autonomy and the

ability to provide charity care. Capitation payments to doctors became more common (see

box below). By 2010, 44 million Americans, nearly a fifth of the population, had no health

insurance at all. Meanwhile healthcare costs rose at double the rate of inflation. By the end

of 2010, healthcare consumed 17.9% of the gross national product (Collyer & White, 2011;

Lown, 2007; PBS, 2013; Starr, 1982, pp. 420–449).

UNDER THE MICROSCOPE

Capitation: Risk Management for Health Providers

Capitation payments, in which providers accept a fixed payment per year to cover an

agreed-upon list of services for each HMO patient assigned to them, are a means for

healthcare providers to manage risk and payment. Provisions from the 1973 Health

Maintenance Organization Act helped define capitation payments, which rewarded

recipients and physicians for reducing costs (Miller, 2009).

With capitation payments, physicians are encouraged to keep costs down, as they face

financial penalties for over-utilizing healthcare. At the same time, however, they must

maintain a pre-established standard of care. Capitation offers providers a powerful

incentive to avoid the most costly patients. At the beginning of the year, a risk pool is

established. If the plan does well financially, the physician shares in the profits, and if it

does poorly, pool money is used to cover the deficits. This plan works best for larger

practices as they can manage risk more easily than sole practitioners or small offices

(Alguire, n.d.).
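
The risk-pool mechanics described above can be sketched as a short calculation. This is only an illustration under stated assumptions: the function name, the idea of withholding a fixed percentage of capitation payments to fund the pool, and all dollar figures are hypothetical and not taken from any actual HMO contract.

```python
def settle_capitation_year(patients, payment_per_patient, withhold_percent, actual_costs):
    """Settle one year of a simplified capitation arrangement.

    Assumptions (hypothetical, for illustration only):
    - the provider receives a fixed payment per assigned patient per year;
    - a percentage of that total is withheld up front to fund the risk pool;
    - a surplus leaves the pool untouched and is shared as profit;
    - a deficit is covered from the pool, up to the pool's size.
    """
    total_capitation = patients * payment_per_patient
    risk_pool = total_capitation * withhold_percent // 100
    care_budget = total_capitation - risk_pool

    balance = care_budget - actual_costs  # positive means surplus
    surplus = max(0, balance)
    deficit = max(0, -balance)

    pool_used = min(risk_pool, deficit)      # pool money covers the deficit
    uncovered_deficit = deficit - pool_used  # anything the pool cannot absorb

    return {
        "risk_pool": risk_pool,
        "surplus": surplus,
        "pool_used": pool_used,
        "pool_returned": risk_pool - pool_used,
        "uncovered_deficit": uncovered_deficit,
    }


# Hypothetical practice: 1,000 patients at $500 each, 10% withheld for the pool.
good_year = settle_capitation_year(1000, 500, 10, actual_costs=430_000)
bad_year = settle_capitation_year(1000, 500, 10, actual_costs=480_000)

print(good_year)
print(bad_year)
```

In the hypothetical good year the practice keeps its surplus and the whole pool; in the bad year part of the pool is consumed to cover the overrun. A larger practice spreads a few very costly patients over more capitation payments, which is one way to see why the arrangement suits larger practices better.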

An Era of Oversight and Reform

As the healthcare industry entered an era of big business in the 1980s, the U.S. government

sought to establish a larger number of policies that would ensure quality of care, protect

patient privacy, and contain costs—increasingly elusive goals. The U.S. government enacted

a series of legislative measures to address the most urgent needs of an ailing system:

quality of care and access to it.

Omnibus Budget Reconciliation Act (OBRA)

Growing concerns about the quality of nursing home care in the 1980s led the Institute of

Medicine to undertake a study of how best to regulate the level of care in the nation’s

Medicaid- and Medicare-certified nursing homes. Their report, Improving the Quality of

Care in Nursing Homes, laid the foundation for the Nursing Home Reform Act, part of the

Omnibus Budget Reconciliation Act (OBRA) of 1987. This act set national minimum

standards of care and rights for people living in certified long-term care facilities. The act

emphasized quality of life as well as quality of care; advocated patients’ rights; set uniform

certification standards for Medicare and Medicaid homes; created new rules for training

and testing of para-professional staff; and required more interaction between state

inspectors, residents, and family members during annual inspections.

The passage of OBRA of 1987 resulted in significant improvements in the quality of patient

care and quality of life, along with comprehensive care planning for nursing homes and

residents. Antipsychotic drug use declined by 28–36% and physical restraint use was

reduced by approximately 40% in these facilities after OBRA (Turnham, 2013).

Follow-on legislation in the form of OBRA of 1990 established pharmacy and

therapeutics committees; boards to manage a state’s purchase of drugs and formulary

decisions for Medicaid; and benefits programs for injured workers and state employees

(Tax Policy Center, 2010).

Prescription Drug User Fee Act (PDUFA)

The advent of the AIDS virus in the early 1980s brought to light another healthcare issue:

prescription drug testing and availability. Although AIDS activists were the most vocal in

their criticism of the FDA timeline for drug approval, they were far from the only unhappy

group. Consumers, the pharmaceutical and device industries, and the FDA itself all felt that

drug approvals took too long. Companies complained that the mean 31.1-month timeline

for approval reduced the time available to recoup the costs of research and development. In

desperation, terminally ill patients went abroad to buy drugs that had stalled in the FDA

pipeline. When Congress ignored FDA requests for added resources, the agency

suggested charging an application fee to pharmaceutical companies to speed up the

approval process (Thaul, 2008).

The result was the Prescription Drug User Fee Act (PDUFA), passed by Congress in 1992.

PDUFA allowed the FDA to collect substantial fees from pharmaceutical companies in

exchange for a faster regulatory review of new products. Following the measure’s

enactment, the FDA inbox of HIV/AIDS drugs quickly emptied, and the agency introduced

expedited approval of drugs for life-threatening diseases and expanded pre-approval

access to drugs for patients with limited treatment options (U.S. Food and Drug

Administration [FDA], 2013a). PDUFA funds enabled the FDA to increase the number of

new drug reviewers by 77%. The median time for nonpriority new drug approval

decreased from 27 months to 14 months, with the goal being one year. The probability of a

new drug being launched first in the United States increased by 31% at the end of PDUFA I

and by 27% by the end of PDUFA II. The average number of new drugs approved each year

under PDUFA increased by one-third. User fees now cover roughly 65% of the drug

approval process (Cantor, 1997; Cox, 1997; Olson, 2009).

Health Insurance Portability and Accountability Act (HIPAA)

As healthcare became increasingly the domain of large corporations, patients often found

themselves at the mercy of impersonal bureaucracies. Patient privacy became a significant

issue in healthcare, and in 1996, the federal government responded with the passage of the

Health Insurance Portability and Accountability Act (HIPAA). The primary goal of HIPAA is

to make it easier for people to keep health insurance, protect the confidentiality and

security of healthcare information, and help the healthcare industry control administrative

costs. Title I of HIPAA limits restrictions that a group health plan can place on benefits for

preexisting conditions. Individuals with previous coverage may reduce the normal 12- to

18-month exclusion period by the amount of time that they had “creditable coverage” prior to

enrolling in the plan (Legal Information Institute, 2013).

In enacting Title II of HIPAA, Congress mandated the establishment of federal standards for

the privacy of personal health information. These new safeguards protect the security and

confidentiality of personal information that moves across hospitals, doctors’ offices,

insurers or third party payers, and state lines, as well as how the information is stored in

computers and transmitted electronically. Patient consent is required before sharing any

protected health information with any organization or individual (Geomar Computers,

n.d.).

The Department of Health and Human Services is in charge of health information security

and can levy stiff penalties for noncompliance. Critics have argued that while protecting

patients and giving patients better access to their health records, HIPAA places an added

burden on healthcare professionals for keeping medical record information secure, raises

maintenance costs, and increases the time needed for educating employees and patients.

(Chapter 10 discusses some of the challenges to health organizations stemming from

HIPAA.) Unresolved issues remain, including HIPAA’s effects on homeland security and

disaster planning, the need for a unique patient identifier, and the impact on research

initiatives (Harman, 2005).

Children’s Health Insurance Program (CHIP)

First proposed by Democratic Senator Edward Kennedy of Massachusetts in 1997, the State

Children’s Health Insurance Program (SCHIP), later called the Children’s Health Insurance

Program (CHIP), was the largest expansion of taxpayer-funded health insurance coverage

for children in the United States since Medicaid (Pear, 1997). CHIP is a partnership

between federal and state governments offering coverage for children of the working poor

with family incomes ranging from 160% (North Dakota) to 400% (New York) of the poverty

level. The program, administered by the U.S. Department of Health and Human Services,

provides matching funds to states from taxes on cigarettes. President Clinton signed the bill

authorizing CHIP into law as part of the Balanced Budget Act of 1997 (HHS, 1998).

Although funded by the federal government, states design and run their own CHIP

programs. Each state can employ a great deal of flexibility in eligibility and enrollment

requirements, and some states even use private insurance companies to run them. The

Children’s Health Insurance Reauthorization Act of 2009 expanded the program by adding

$32.8 billion to extend coverage to an additional four million children and

pregnant women and to cover legal immigrants without a waiting period. Detractors

argue that the program is costly, adding $40 billion to the government’s healthcare debt.

It also costs the states more because children who leave the CHIP program for whatever

reason, and forgo the preventive care provided by CHIP, often end up in hospital emergency

rooms for more costly care. Moreover, for every 100 children who gain CHIP coverage,

between 24 and 50 individuals drop private coverage, so critics accuse public programs of

crowding out private insurers (Cannon, 2007; Rimsza, Butler, & Johnson, 2007).

Direct-to-Consumer Advertising

The authority to approve pharmaceutical products for marketing has been the domain of

the FDA since the Federal Food, Drug, and Cosmetic Act of 1938. In 1962, these responsibilities expanded to include the

regulation of prescription drug labeling and advertising. In 1969, the FDA issued

regulations to guide the truthfulness and fairness of such advertising. That system

remained standard until 1981, when Merck ran the first direct-to-consumer (DTC) print

advertisement in Reader’s Digest for Pneumovax®, a vaccine to prevent infection with

pneumococcal bacteria, which can cause middle ear infections, pneumonia, meningitis

(inflammation of the lining around the brain), and septicemia (blood poisoning). In 1983,

Boots Pharmaceuticals ran the first broadcast advertisement promoting the lower price of

its prescription brand of ibuprofen (Rufen) compared with Motrin (McNeil Consumer)

(Greene & Herzberg, 2010; Ventola, 2011). Branded drug advertising subsequently

exploded in print, radio, and television. Fearing that misleading or confusing

advertisements would harm consumers, doctors and the AMA pressured the FDA to put a

moratorium on all DTC drug advertising. In spite of these efforts, the FDA permitted DTC

broadcast advertising (provided the ad met minimum standards for risk

information), and by 1999, Americans were exposed to nine prescription drug

advertisements on television every day.

Spending on DTC advertising increased from $220 million in 1997 to over $2.8 billion in

2002. In 2005, drug companies spent $4.2 billion on consumer ads, $7.2 billion promoting

drugs to physicians, and $31.4 billion on research and development. The cost of such

advertising has led to a 34.2% rise in the cost of advertised prescription drugs, compared with a 5.1%

increase for prescriptions not advertised in the media (“Direct-to-consumer,” 2013).

Medicare Comes of Age

The late 1990s saw a push to modernize Medicare, with emphasis on reforming the

contractor system, the system of intermediaries through which providers submit their

claims to the government. The goals were to “integrate claims processing activities,

improve customer service and operations, reduce claims processing error rates, and

implement new information technology to modernize and update antiquated financial

management and fragmented accounting systems” (Foote, 2007, p. 74). In addition to

Medicare Part A (pays for hospital costs) and Part B (pays for outpatient costs), Part C

(Medicare Advantage) was added. Medicare Advantage provided Medicare benefits to

beneficiaries who enrolled in plans offered by private health insurance organizations.

Medicare Advantage provided a wide range of private plans to compete with Medicare, as

well as billions of dollars in subsidies to insurance companies and health maintenance

organizations. By 2009, 23% of the total Medicare population had enrolled in Medicare

Advantage plans.

In the 1990s, prescription drug coverage was the focus of the effort to bring Medicare up to

date. When President Clinton put the issue on the political agenda in his 1999 State of the

Union speech, politicians battled over which party would be the first to add an entitlement

benefit for prescription drugs as part of the Medicare Prescription Drug, Improvement, and

Modernization Act.

At its inception in 1965, Medicare did not include prescription drugs among its benefits, as

drugs were fewer in number and less clinically effective. By 2000, however, concern over the

price of prescription drugs and the need for senior coverage began to receive media

attention. A small industry sprang up to fulfill this need. Medicare patients are responsible

for paying their own drug costs when they enter the gap or donut hole, which is the area

between the initial coverage limit and the catastrophic-coverage threshold.

By the late 1990s, talk of budget-surplus projections made the addition of drug benefits

seem more affordable. In addition, the older population had begun to depend on these

treatments for a variety of acute and chronic ailments, but the cost was becoming a burden.

Meanwhile, Republicans hoped to contain Medicare costs by pushing more Medicare users

into HMOs. Some viewed prescription drug coverage as a means to achieve larger, market-

oriented health reform; others saw covering only those who joined HMOs as a path to

reform. However, both HMOs and large employers were cutting back their drug benefit

plans, and HMOs were losing popularity. The effort to make Medicare more like

private health insurance by adding prescription drugs to the plan ultimately failed.

The lack of a prescription drug benefit as part of Medicare left many seniors vulnerable,

and in 2003, President George W. Bush signed into law the largest Medicare expansion in

the history of the program. The Medicare Prescription Drug, Improvement, and

Modernization Act created a new prescription drug benefit and enacted other important

changes to Medicare. The bill’s provisions

• prevented large companies from eliminating private prescription coverage for

retirees,

• prohibited the federal government from negotiating discounts with drug companies,

and

• prohibited the government—but not private providers—from establishing a drug

formulary, a health plan’s list of preferred generic and brand name prescription

drugs.

Applewhite/Associated Press

The Patient Protection and Affordable Care Act, signed by President Obama on March 23, 2010, is

the most significant government expansion and regulatory overhaul of healthcare since the passage

of Medicaid and Medicare.

Known as Part D, the prescription drug benefit was initially estimated to cost $400 billion

between 2004 and 2013. As of February 2005, the estimated ten-year cost had risen to

$724 billion, even though the benefit is an optional part of Medicare. The AMA, AHA, and American

Association of Health Plans supported the passage of the bill, as did the pharmaceutical

industry, although it insisted that no price controls be adopted as part of the plan

(Campbell & Morgan, 2005).

A Healthcare Watershed: The Patient Protection and Affordable Care Act (PPACA)

With the arrival of the 21st century, staggering health costs and lack of patient insurance

had become an overwhelming priority for policy makers and the public alike. Republicans

favored more marketization, or even privatization, of federal entitlements for the

healthcare system (Marmor, 2000). While campaigning for the presidency in 2007, Senator

Barack Obama of Illinois announced plans for a “public option,” a government insurance

program that would compete with private healthcare. Upon his 2008 election to the

presidency, President Obama made healthcare reform one of his highest priorities, a

challenge that nearly every president and Congress, whether Democratic or Republican,

has attempted to meet in some way.

On March 23, 2010, President Obama signed the Patient Protection and Affordable Care Act

(PPACA) into law. Better known as the Affordable Care Act (ACA) and nicknamed

“Obamacare,” it was the most significant government expansion and regulatory overhaul of

healthcare since the 1965 passage of Medicaid and Medicare. The ACA mandated health

insurance coverage for 35–45 million uninsured individuals and aimed to correct some of

the worst practices of the insurance companies, including screening for preexisting

conditions and loading of premiums; policy rescissions on technicalities when illness seemed